Chapter 1 Introduction

The central idea we will explore in this book is how to use Bayes’ theorem to quantify uncertainty about our belief regarding a scientific question of interest, given some data. Before we delve into the details of the underlying theory and its application, it is important to have some familiarity with the following topics: basic concepts of probability, the concept of random variables, probability distributions, and the concept of likelihood. Therefore, we will begin with these topics.

Some of these concepts might seem abstract at first, but they are very relevant for conducting a Bayesian analysis. When reading this book for the first time, it might be helpful to do a quick pass through this chapter and return to it as needed while progressing through the rest of the book.

1.1 Probability

Informally, we all understand what the term probability means. We routinely talk about things like the probability of it raining today. However, there are two distinct ways to think about probability. One can think of the probability of something happening with reference to the frequency with which it might occur in repeated observations. Such a conception of probability is easy to imagine in cases where something can, at least in principle, occur repeatedly.

An example would be obtaining a 6 when tossing a die again and again. However, this frequentist view of probability is difficult to justify when talking about one-of-a-kind things, such as earthquakes; here, probability is expressing our uncertainty about the earthquake happening.

Both the frequency-based and the uncertain-belief perspective have their place in statistical inference, and depending on the situation, we are going to rely on both ways of thinking.

The statements below are not formal definitions of the axioms of probability theory; for more details (and more precise formulations), see Blitzstein and Hwang (2014), Ross (2002), Kerns (2014), Resnick (2019), or Kolmogorov (1933) (among many other books). Keep in mind that different textbooks have slightly different ways of presenting the underlying structure of what constitutes a probability space (defined below).

The probability of something happening is defined to be constrained in the way described below. A concrete example of “something happening” is an outcome–call it \(\omega\)–such as obtaining nine correct (\(c\)) answers and one incorrect (\(i\)) when we ask a subject \(10\) yes-no questions (say, about the meaning of a sentence). An example would be a sequence of correct (\(c\)) and incorrect (\(i\)) answers, such as \(iiiiciiiii\). Another possible outcome is \(cciicicici\). The outcomes are thus all possible sequences of correct and incorrect answers, and the sample space, \(\Omega\), is the set of all possible outcomes.

Another important concept is events, \(E\), next; the event corresponding to obtaining one correct answer when \(10\) questions are asked, is the subset of outcomes \(\{ciiiiiiiii,iciiiiiiii,iiciiiiiii,\ldots\}\); in other words, the event corresponding to obtaining one correct answer is a set containing \(10\) elements. Similarly, the event corresponding to obtaining nine correct answers when \(10\) questions are asked is the subset of outcomes \(\{ccccccccci,ccccccccic,\ldots \}\).

When we conduct an experiment, if we get a particular outcome like \(ciiiiiiiii\), then we say that the event \(\{ciiiiiiiii,iciiiiiiii,iiciiiiiii,\ldots\}\) occurred. A probability space involves two more key ingredients: A collection of subsets of \(\Omega\) denoted by \(F\) and called the event space;1 and a real-valued function named \(P\) that assigns a number (a probability) to each set in \(F\).

Table 1.1 summarizes the notation.

| Symbol | Name | Brief explanation | Example |

|---|---|---|---|

| \(\Omega\) | Sample space | The set of all possible outcomes | All possible sequences of correct (c) or incorrect (i) answers of length 10; that is \(2^{10}=1024\) sequences. |

| \(F\) | Event space | A collection of subsets of \(\Omega\) | All sequences with exactly 1 correct answer, or exactly 9 correct answers, etc. |

| \(P\) | Probability fun. | Assigns probabilities to events in \(F\) | If each question is answered correctly with probability \(\theta\), \(P(\{ciiiiiiiii\}) = (1-\theta)^9\theta\). |

| \(\omega\) | Outcome | An element of \(\Omega\) | The particular sequence \(ciiiiiiiii\). |

| \(E\) | Event | A subset of \(\Omega\) | A collection of sequences \(\{ciiiiiiiii, iciiiiiiii, iiciiiiiii, \ldots\}\). |

The probability axioms refer to the sample space \(\Omega\), event space \(F\), and probability \(P\) as follows:

- For every event \(E\) in the event space \(F\), the probability \(P(E)\) is a real number between \(0\) and \(1\).

- The event \(E=\Omega\) belongs to \(F\), and \(P(\Omega)=1\).

- If the events \(A_1, A_2, A_3,...\) are mutually exclusive (in other words, if no two of these subsets of \(\Omega\) overlap), then the probability of the event “one of \(A_1\) or \(A_2\) or \(A_3\) or …” is given by the sum of the probability of \(A_1\) occurring, of \(A_2\) occurring, of \(A_3\) occurring, … (this sum could be finite or infinite).

Together, the triplet \((\Omega, F, P)\) is called a probability space.

In the context of data analysis, we will talk about probability in the following way. Consider some data that we might have collected. This could be discrete \(0,1\) responses in a question-response accuracy task, or continuous measurements of reading times in milliseconds from an eyetracking study, or multi-category responses like “yes”, “no”, or “don’t know”, or electrical potentials on the microvolts scale.

In any such case, we will say that the data are being generated from a random variable, which we will designate with a capital letter such as \(Y\).2

The actually observed outcome (a \(0,1\) response; reading time, a response like “yes”, “no”, “don’t know”, etc.) will be distinguished from the random variable that generated it by using lower case \(y\) for the observed outcome. We can call \(y\) an instance of \(Y\); every new observed outcome can be different due to random variability.

We can summarize the above informal concepts relating to random variables very compactly if we re-state them in mathematical form. A mathematical statement has the advantage not only of brevity but also of reducing (and hopefully eliminating) ambiguity.

So, stating the definition of random variables formally (following Blitzstein and Hwang 2014), we define a random variable \(Y\) as a function from a sample space \(\Omega\) of possible outcomes \(\omega\) to the real number system:3

\[\begin{equation} Y : \Omega \rightarrow \mathbb{R} \end{equation}\]

The random variable associates to each outcome \(\omega \in \Omega\) exactly one number \(Y(\omega) = y\). The number \(y\) is a variable that represents all the possible values that the random variable generates; these values are taken to belong to the support of the random variable \(Y\): \(y \in S_Y\).

In our running example of asking \(10\) questions and obtaining a correct or incorrect response in each of the \(10\) trials, \(Y(ciiiiiiiii) = 1\), \(Y(cciiiiiiii) = 2\), etc. We will say that the number of correct responses from a subject is generated from a random variable \(Y\). Because in our running example only discrete responses are possible (the number of correct responses can be \(0, 1, 2, \ldots, 10\)), this is an example of a discrete random variable.

This particular random variable \(Y\) will be assumed to have a parameter \(\theta\) that represents the probability of producing a particular number of correct responses (as discussed below, you will see that the random variable in question is the binomial random variable). Given some observed data that is assumed to come from a particular distribution, typically our goal is to obtain an estimate of the (unknown) value of the parameter associated with that distribution. More generally, if there is more than one parameter involved in the distribution, then our goal is to obtain an estimate of these parameters.

Now, suppose that in our above example, the random variable \(Y\) gives us exactly one correct answer; this can be (somewhat sloppily) be written as \(Y=1\). The outcomes that could produce \(1\) are any of this set of ten possible outcomes: \(ciiiiiiiii\), \(iciiiiiiii\), \(iiciiiiiii\), \(iiiciiiiii\),…. The set \(\{ciiiiiiiii,iciiiiiiii,iiciiiiiii,iiiciiiiii,...\}\) is an element of \(F\), so it is an event. The number \(y=1\) thus represents this event, and will have a probability of occurring associated with it; this is defined next.

A discrete random variable \(Y\) has associated with it a function called a probability mass function or PMF. This function, which is written \(p(y)\), gives us the probability of obtaining each of these \(11\) possible values (from 0 correct responses to 10). We are using lower-case \(p(y)\) here to denote a function of \(y\). When we want to talk about the probability of observing \(y\), we will use \(P(y)\). In discrete random variables the value \(p(y)\) will be the same as \(P(y)\); but when we turn to continuous random variables, this equality will not hold.

We will write that this PMF \(p(y)\) depends on, or is conditional on, a particular fixed but unknown value for \(\theta\); the PMF will be written \(p(y|\theta)\).4

In frequentist approaches to data analysis, only the observed data \(y\) are used to draw inferences about \(\theta\). A typical question that we ask in the frequentist paradigm is: does \(\theta\) have a particular value \(\theta_0\)? One can obtain estimates of the unknown value of \(\theta\) from the observed data \(y\), and then draw inferences about how different–or more precisely how far away–this estimate is from the hypothesized \(\theta_0\). This is the essence of null hypothesis significance testing. The conclusions from such a procedure are framed in terms of either rejecting the hypothesis that \(\theta\) has value \(\theta_0\), or failing to reject this hypothesis. Here, rejecting the null hypothesis is the primary goal of the statistical hypothesis test.

Bayesian data analysis begins with a different question. What is common to the frequentist paradigm is the assumption that the data are generated from a random variable \(Y\) and that there is a function \(p(y|\theta)\) that depends on the parameter \(\theta\). Where the Bayesian approach diverges from the frequentist one is that an important goal is to express our uncertainty about \(\theta\). In other words, we treat the parameter \(\theta\) itself as a random variable, which means that we assign a probability distribution \(p(\theta)\) to this random variable. This distribution \(p(\theta)\) is called the prior distribution on \(\theta\); such a distribution could express our belief about the probability of correct responses, before we observe the data \(y\).

In later chapters, we will spend some time trying to understand how such a prior distribution can be defined for a range of different research problems.

Given such a prior distribution and some data \(y\), the end-product of a Bayesian data analysis is what is called the posterior distribution of the parameter (or parameters) given the data: \(p(\theta | y)\). This posterior distribution is the probability distribution of \(\theta\) after conditioning on \(y\), i.e., after the data has been observed and is therefore known. All our statistical inference is based on this posterior distribution of \(\theta\); we can even carry out hypothesis tests that are analogous (but not identical) to the likelihood ratio based frequentist hypothesis tests.

We already mentioned conditional probability above when discussing the probability of the data given some parameter \(\theta\), which we wrote as the PMF \(p(y|\theta)\). Conditional probability is an important concept in Bayesian data analysis, not least because it allows us to derive Bayes’ theorem. Let’s look at the definition of conditional probability next.

1.2 Conditional probability

Suppose that \(A\) stands for some discrete event; an example would be “the streets are wet.” Suppose also that \(B\) stands for some other discrete event; an example is “it has been raining.” We can talk about the probability of the streets being wet given that it has been raining; or more generally, the probability of \(A\) given that \(B\) has happened.

This kind of statement is written as \(Prob(A|B)\) or more simply \(P(A|B)\). This is the conditional probability of \(A\) given \(B\). Conditional probability is defined as follows.

\[\begin{equation} P(A|B)= \frac{P(A,B)}{P(B)} \hbox{ where } P(B)>0 \end{equation}\]

The conditional probability of A given B is thus defined to be the joint probability of A and B, divided by the probability of B. We can rearrange the above equation so that we can talk about the joint probability of both events \(A\) and \(B\) happening. This joint probability can be computed by first taking \(P(B)\), the probability that event \(B\) (it has been raining) happens, and multiplying this by the probability that \(A\) happens conditional on \(B\), i.e., the probability that the streets are wet given it has been raining. This multiplication will give us \(P(A,B)\), the joint probability of \(A\) and \(B\), i.e., that it has been raining and that the streets are wet. We will write the above description as: \(P(A,B)=P(A|B)P(B)\).

Now, since the probability \(A\) and \(B\) happening is the same as the probability of \(B\) and \(A\) happening, i.e., since \(P(B,A)=P(A,B)\), we can equate the expansions of these two terms:

\[\begin{equation} P(A,B) = P(A|B) P(B) \hbox{ and } P(B,A) = P(B|A)P(A) \end{equation}\]

Equating the two expansions, we get:

\[\begin{equation} P(A|B) P(B) = P(B|A)P(A) \end{equation}\]

Dividing both sides by \(P(B)\):

\[\begin{equation} P(A|B)=\frac{P(B|A)P(A)}{P(B)} \end{equation}\]

The above statement is Bayes’ rule, and is the basis for all the statistical inference we will do in this book.

1.3 The law of total probability

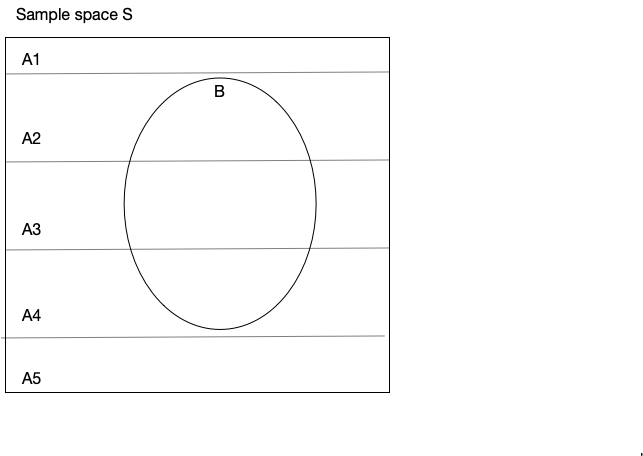

Related to the above discussion of conditional probability is the law of total probability. Suppose that we have \(A_1,\dots,A_n\) distinct events that are pairwise disjoint which together make up the entire sample space \(\Omega\); see Figure 1.1. Then, \(P(B)\), the probability of \(B\) happening, will be the sum of the probabilities \(P(B\cap A_i)\), i.e., the sum of the joint probabilities of \(B\) and each \(A\) occurring (the symbol \(\cap\) is the “and” operator used in set theory). The use of \(\cap\) to represent joint probability is just an alternative notation to the one we used earlier (\(P(B,A_i)\)); you may see both notational variants in textbooks.

Formally:

\[\begin{equation} P(B) = \sum_{i=1}^n P(B \cap A_i) \end{equation}\]

Because of the conditional probability rule, we can rewrite this as:

\[\begin{equation} P(B) = \sum_{i=1}^n P(B | A_i) P(A_i) \end{equation}\]

Thus, the probability of \(B\) is the sum of the conditional probabilities \(P(B|A_i)\) weighted by the probability \(P(A_i)\). We will see the law of total probability in action below when we talk about marginal likelihood.

FIGURE 1.1: An illustration of the law of total probability.

For now, this is all the probability theory we need to know!

The next sections expand on the idea of a random variable, the probability distributions associated with the random variable, what it means to specify a prior distribution on a parameter, and how the prior and data can be used to derive the posterior distribution of \(\theta\).

To make the discussion concrete, we will use an example of a discrete random variable, the binomial. After discussing this discrete random variable, we present another example, this time involving a continuous random variable, the normal random variable.

The binomial and normal cases serve as the canonical examples that we will need in the initial stages of this book. We will introduce other random variables as needed: in particular, we will need the uniform and beta distributions. In other textbooks, you will encounter distributions like the Poisson, gamma, and the exponential. The most commonly used distributions and their properties are discussed in most textbooks on statistics (see Further Reading at the end of this chapter).

1.4 Discrete random variables: An example using the binomial distribution

Consider the following sentence:

“It’s raining, I’m going to take the ….”

Suppose that our research goal is to estimate the probability, call it \(\theta\), of the word “umbrella” appearing in this sentence, versus any other word. If the sentence is completed with the word “umbrella”, we will refer to it as a success; any other completion will be referred to as a failure. This is an example of a binomial random variable: given \(n\) trials, there can be only two possible outcomes in each trial, a success or a failure, and there is some true unknown probability \(\theta\) of success that we want to estimate. When the number of trials \(n\) is one, the random variable is called a Bernoulli distribution. So the Bernoulli distribution is the binomial distribution with the number of trials \(n=1\).

One way to empirically estimate this probability of success is to carry out a cloze task. In a cloze task, subjects are asked to complete a fragment of the original sentence, such as “It’s raining, I’m going to take the …”. The predictability or cloze probability of “umbrella” is then calculated as the proportion of times that the target word “umbrella” was produced as an answer by subjects.

Assume for simplicity that \(10\) subjects are asked to complete the above sentence; each subject does this task only once. This gives us \(10\) independent trials that are either coded a success (“umbrella” was produced) or as a failure (some other word was produced). We can sum up the number of successes to calculate how many of the \(10\) trials had “umbrella” as a response. For example, if \(8\) instances of “umbrella” are produced in \(10\) trials, we would estimate the cloze probability of producing “umbrella” to be \(8/10\).

We can repeatedly generate simulated sequences of the number of successes in R (later on we will demonstrate how to generate such random sequences of simulated data). Here is a case where we carry out \(20\) experiments, and each experiment will have \(10\) trials.

Before we look at the R code for generating such simulated data, some notational conventions are needed to avoid confusion. In the function we use below (rbinom()) for generating simulated data, n refers to the number of experiments, which in the R function’s terminology is the number of observations. By contrast, size is the number of trials. This means that in the example below, n refers to the number of experiments conducted; because in R-speak these are called observations, we are also going to adopt this terminology. So, if \(20\) experiments are done, each with \(10\) trials, we will say that we have \(20\) observations, each with \(10\) trials.

## n=the number of observations (no. of experiments)

## size=the number of trials in each observation

rbinom(n = 20, size = 10, prob = 0.5)## [1] 3 8 5 5 5 2 10 6 6 6 6 5 3 6 2 5 6 5 5 6The number of successes in each of the \(20\) simulated observations above is being generated by a discrete random variable \(Y\) with a probability distribution \(p(y|\theta)\) called the binomial distribution.

For discrete random variables such as the binomial, the probability distribution \(p(y|\theta)\) is called a probability mass function (PMF). The PMF defines the probability of each possible event. In the above example, with the number of trials \(\hbox{size}=10\), there are in principle \(11\) possible events that could produce \(y=0,1,2,...,10\) successes. Which of these is most probable depends on the parameter \(\theta\) in the binomial distribution that represents the probability of success.

The left-hand side plot in Figure 1.2 shows an example of a binomial PMF with \(10\) trials, with the parameter \(\theta\) fixed at \(0.5\). Setting \(\theta\) to \(0.5\) leads to a PMF where the most probable outcome is \(5\) successes out of \(10\). If we had set \(\theta\) to, say \(0.1\), then the most probable outcome would be \(1\) success out of \(10\); and if we had set \(\theta\) to \(0.9\), then the most probable outcome would be \(9\) successes out of \(10\).

FIGURE 1.2: Probability mass functions of a binomial distribution assuming 10 trials, with 50%, 10%, and 90% probability of success.

The probability mass function for the binomial is written as follows. Here, it is again important to pay attention to the notation, as there is potential for confusion given the conventions in the rbinom function we saw earlier.

\[\begin{equation} \mathit{Binomial}(k|n,\theta) = \binom{n}{k} \theta^{k} (1-\theta)^{n-k} \end{equation}\]

Here, \(n\) represents the total number of trials,

and corresponds to size in the rbinom function; the confusion that can arise here is that in the rbinom function n is used to represent the number of observations (or the number of experiments). It is important to remember that in the definition of the probability mass function above, \(n\) represents the total number of trials in a single observation (or experiment).

The variable \(k\) the number of successes (this could range from \(0\) to \(10\) in our running example), and \(\theta\) the probability of success. The term \(\binom{n}{k}\), pronounced n-choose-k, represents the number of ways in which one can obtain \(k\) successes in an experiment with \(n\) trials. For example, \(1\) success out of \(10\) can occur in \(10\) possible ways: the very first observation could be a \(1\), or the second observation could be a \(1\), etc.

The term \(\binom{n}{k}\) expands to \(\frac{n!}{k!(n-k)!}\). In R, it is computed using the function choose(n,k), with \(n\) and \(k\) representing any real number (although of course, the choose() function only makes sense for positive integers).

When we want to express the fact that the data is assumed to be generated from a binomial random variable, we will write \(Y \sim \mathit{Binomial}(n,\theta)\). If the data is generated from a random variable that has some other probability distribution \(f(\theta)\), we will write \(Y\sim f(\theta)\). In this book, we use \(f(\cdot)\) synonymously with \(p(\cdot)\) to represent a probability density/mass function.

1.4.1 The mean and variance of the binomial distribution

It is possible to analytically compute the mean (expectation) and variance of the PMF associated with the binomial random variable \(Y\).

The expectation of a discrete random variable \(Y\) with probability mass function f(y), is defined as

\[\begin{equation} E[Y] = y_1 \cdot f(y_1) + \dots + y_n \cdot f(y_n) = \sum_{i=1}^n y_i \cdot f(y_i) \end{equation}\]

As a simple example, suppose that we toss a fair coin once. The possible events are Tails (represented as \(y_1 = 0\)) and Heads (represented as \(y_2 = 1\)), each with equal probability, 0.5. The expectation is:

\[\begin{equation} E[Y] = \sum_{i=1}^{2} y_i \cdot f(y_i) = 0\cdot 0.5 + 1\cdot 0.5 = 0.5 \end{equation}\]

The expectation has the interpretation that if we were to do the experiment a large number of times and calculate the sample mean of the observations, in the long run we would approach the value \(0.5\). Another way to look at the above definition is that the expectation gives us the weighted mean of the possible outcomes, weighted by the respective probabilities of each outcome.

Without getting into the details of how these are derived mathematically (Kerns 2014), we just state here that the mean of \(Y\) (the expectation \(E[Y]\)) and the variance of \(Y\) (written \(Var(Y)\)) of a binomial distribution with parameter \(\theta\) and \(n\) trials are \(E[Y] = n\theta\) and \(Var(Y) = n\theta (1-\theta)\), respectively.

In the binomial example above, \(n\) is a fixed number because we decide on the total number of trials before running the experiment. In the PMF, \(\binom{n}{k} \theta^{k} (1-\theta)^{n-k}\), \(\theta\) is also a fixed value; the only variable in a PMF is \(k\). In real experimental situations, we never know the true point value of \(\theta\). But \(\theta\) can be estimated from the data. From the observed data, we can compute the estimate of \(\theta\), \(\hat \theta=k/n\). The quantity \(\hat \theta\) is the observed proportion of successes, and is called the maximum likelihood estimate of the true (but unknown) parameter \(\theta\).5

What does the term “maximum likelihood estimate” mean? In order to understand this term, it is necessary to first understand what a likelihood function is. Recall that in the discussion above, the PMF \(p(k|n,\theta)\) assumes that \(\theta\) and \(n\) are fixed, and \(k\) will have some value between \(0\) and \(10\) when the experiment is repeated multiple times.

The likelihood function refers to the PMF \(p(k|n,\theta)\), treated as a function of \(\theta\). Once we have observed a particular value for \(k\), this value is now fixed, along with the number of trials \(n\). Once \(k\) and \(n\) are fixed, the function \(p(k|n,\theta)\) only depends on \(\theta\). Thus, the likelihood function is the same function as the PMF, but assumes that the data from the completed experiment is fixed and only the parameter \(\theta\) varies (from 0 to 1). The likelihood function is written \(\mathcal{L}(\theta| k,n)\), or simply \(\mathcal{L}(\theta)\).

For example, suppose that we have \(n=10\) trials, and observe \(k=7\) successes. The likelihood function is:

\[\begin{equation} \mathcal{L}(\theta|k=7,n=10)= \binom{10}{7} \theta^{7} (1-\theta)^{10-7} \end{equation}\]

If we now plot the likelihood function for all possible values of \(\theta\) ranging from \(0\) to \(1\), we get the plot shown in Figure 1.3(a).

FIGURE 1.3: (a) The likelihood function for 7 successes out of 10. (b) The plot shows the estimate of the mean proportion of successes sampled from a binomial distribution with true probability of success 0.7, with increasing sample sizes. As the sample size increases, the estimate converges to the true value of 0.7.

What is important about this plot is that it shows that, given the data, the maximum point is at the point \(0.7\), which corresponds to the estimated mean using the formula shown above: \(k/n = 7/10\). Thus, the maximum likelihood estimate (MLE) gives us the most likely value that the parameter \(\theta\) has, given the data. In the binomial, the proportion of successes \(k/n\) can be shown to be the maximum likelihood estimate of the parameter \(\theta\) (e.g., see p. 339-340 of Miller and Miller 2004).

A crucial point: the “most likely” value of the parameter is with respect to the data at hand. The goal is to estimate an unknown parameter value from the data. This estimated parameter value is chosen such that the probability (discrete case) or probability density (continuous case) of getting the sample values (i.e., the data) is a maximum. This parameter value is the maximum likelihood estimate (MLE).

This MLE from a particular sample of data need not invariably give us an accurate estimate of \(\theta\). For example, if we run our experiment with \(10\) trials and get \(1\) success out of \(10\), the MLE is \(0.10\). We could have just happened to observe only one success out of ten by chance, even if the true \(\theta\) were \(0.7\). If we were to repeatedly run the experiment with increasing sample sizes, as the sample size increases, the MLE would converge to the true value of the parameter. Figure 1.3(b) illustrates this point. The key point here is that with a smaller sample size, the MLE from a particular data set may or may not point to the true value.

1.4.2 What information does a probability distribution provide?

In Bayesian data analysis, we will constantly be asking the question: what information does a probability distribution give us? In particular, we will treat each parameter \(\theta\) as a random variable; this will raise questions like: “what is the probability that the parameter \(\theta\) lies between two values \(a\) and \(b\)”; and “what is the range over which we can be 95% certain that the parameter lies”? In order to be able to answer questions like these, we need to know what information we can obtain once we have decided on a probability distribution that is assumed to have generated the data, and how to extract this information using R. We therefore discuss the different kinds of information we can obtain from a probability distribution. For now we focus only on the binomial random variable introduced above.

1.4.2.1 Compute the probability of a particular outcome (discrete case only)

The binomial distribution shown in Figure 1.2 already shows the probability of each possible event under a different value for \(\theta\). In R, there is a built-in function that allows us to calculate the probability of \(k\) successes out of \(n\), given a particular value of \(k\) (this number constitutes our data), the number of trials \(n\), and given a particular value of \(\theta\); this is the dbinom() function. For example, the probability of 5 successes out of 10 when \(\theta\) is \(0.5\) is (note: we are using \(k\) to represent the number of successes, but the dbinom() function below uses x instead):

dbinom(x = 5, size = 10, prob = 0.5)## [1] 0.246The probabilities of success when \(\theta\) is \(0.1\) or \(0.9\) can be computed by replacing \(0.5\) above by each of these probabilities. One can just do this by giving dbinom() a vector of probabilities:

dbinom(x = 5, size = 10, prob = c(0.1, 0.9))## [1] 0.00149 0.00149The probability of a particular outcome like \(k=5\) successes is only computable in the discrete case. In the continuous case, the probability of obtaining a particular point value will always be zero (we discuss this when we turn to continuous probability distributions below).

1.4.2.2 Compute the cumulative probability of k or less (more) than k successes

Using the dbinom() function, we can compute the cumulative probability of obtaining 1 or less, 2 or less successes, etc. This is done through a simple summation procedure:

## the cumulative probability of obtaining

## 0, 1, or 2 successes out of 10 trials,

## with theta=0.5:

dbinom(x = 0, size = 10, prob = 0.5) +

dbinom(x = 1, size = 10, prob = 0.5) +

dbinom(x = 2, size = 10, prob = 0.5)## [1] 0.0547Mathematically, we could write the above summation as:

\[\begin{equation} \sum_{k=0}^2 \binom{n}{k} \theta^{k} (1-\theta)^{n-k} \end{equation}\]

An alternative to the cumbersome addition in the R code above is this more compact statement, which is identical to the above mathematical expression:

sum(dbinom(0:2, size = 10, prob = 0.5))## [1] 0.0547R has a built-in function called pbinom() that does this summation for us. If we want to know the probability of \(2\) or fewer than \(2\) successes as in the above example, we can write:

pbinom(2, size = 10, prob = 0.5, lower.tail = TRUE)## [1] 0.0547The specification lower.tail = TRUE (the default value) ensures that the summation goes from \(2\) to numbers smaller than \(2\) (which lie in the lower tail of the distribution in Figure 1.2). If we wanted to know what the probability is of obtaining \(3\) or more successes out of \(10\), we can set lower.tail to FALSE:

pbinom(2, size = 10, prob = 0.5, lower.tail = FALSE)## [1] 0.945## equivalently:

## sum(dbinom(3:10,size = 10, prob = 0.5))The cumulative distribution function or CDF for a random variable \(Y\) is thus related to the corresponding probability mass/density function, and is written as follows:

\[\begin{equation} F_Y(a) = P(Y\leq a) \end{equation}\]

The CDF can be plotted by computing the cumulative probabilities for any value \(k\) or less than \(k\), where \(k\) ranges from \(0\) to \(10\) in our running example. The CDF is shown in Figure 1.4(a).

FIGURE 1.4: (a) Cumulative distribution function (CDF) of a binomial distribution with 10 trials and a 50% probability of success. (b) Inverse CDF for the same binomial distribution.

1.4.2.3 Compute the inverse of the cumulative distribution function (the quantile function)

We can also find out the value of the variable \(k\) (the quantile) such that the probability of obtaining \(k\) or less than \(k\) successes is some specific probability value \(p\). If we switch the x and y axes of Figure 1.4(a), we obtain another very useful function, the inverse of the CDF.

The inverse of the CDF (known as the quantile function in R because it returns the quantile, the value \(k\)) is available in R as the function qbinom(). The usage is as follows: to find out what the value \(k\) of the outcome is such that the probability of obtaining \(k\) or less successes is \(0.37\), type:

qbinom(p = 0.37, size = 10, prob = 0.5)## [1] 4One can visualize the inverse CDF of the binomial as in Figure 1.4(b).

1.4.2.4 Generate simulated data from a \(\mathit{Binomial}(n,\theta)\) distribution

We can generate simulated data from a binomial distribution by specifying the number of observations or experiments (n), the number of trials (size), and the probability of success \(\theta\). In R, we do this as follows:

rbinom(n = 1, size = 10, prob = 0.5)## [1] 6The above code generates the number of successes in an experiment with \(10\) trials. Repeatedly run the above code; we will get different numbers of successes each time.

As mentioned earlier, if there is only one trial, then instead of the binomial distribution, we have a Bernoulli distribution. For example, if we have 10 experiments from a Bernoulli distribution, where the probability of success is 0.5, we can simulate data as follows using the function rbern() from the package extraDistr.

rbern(n = 10, prob = 0.5)## [1] 1 0 1 1 1 0 1 0 0 0The above kind of output can also be generated by using the rbinom() function: rbinom(n = 10, size = 1, prob = 0.5).

When the data are generated using the rbinom() function in this way, one can calculate the number of successes by just summing up the vector, or computing its mean and multiplying by the number of trials, here \(10\):

(y <- rbinom(n = 10, size = 1, prob = 0.5))## [1] 0 1 1 1 0 0 0 0 0 1mean(y) * 10## [1] 4sum(y)## [1] 41.5 Continuous random variables: An example using the normal distribution

We will now revisit the idea of the random variable using a continuous distribution. Imagine that we have a vector of reading time data \(y\) measured in milliseconds and coming from a normal distribution. The normal distribution is defined in terms of two parameters: the location, its mean value \(\mu\), which determines its center, and the scale, its standard deviation, \(\sigma\), which determines how much spread there is around this center point.

The probability density function (PDF) of the normal distribution is defined as follows:

\[\begin{equation} \mathit{Normal}(y|\mu,\sigma)=f(y)= \frac{1}{\sqrt{2\pi \sigma^2}} \exp \left(-\frac{(y-\mu)^2}{2\sigma^2} \right) \end{equation}\]

Here, \(\mu\) is some true, unknown mean, and \(\sigma^2\) is some true, unknown variance of the normal distribution that the reading times have been sampled from. There is a built-in function in R that computes the above function once we specify the mean \(\mu\) and the standard deviation \(\sigma\) (in R, this parameter is specified in terms of the standard deviation rather than the variance).

Figure 1.5 visualizes the normal distribution for particular values of \(\mu\) and \(\sigma\), as a PDF (using dnorm()), a CDF (using pnorm()), and the inverse CDF (using qnorm()). It should be clear from the figure that these are three different ways of looking at the same information.

FIGURE 1.5: The PDF, CDF, and inverse CDF for the \(\mathit{Normal}(\mu=500,\sigma=100)\).

As in the discrete example, the PDF, CDF, and inverse of the CDF allow us to ask questions like:

- What is the probability of observing values between \(a\) and \(b\) from a normal distribution with mean \(\mu\) and standard deviation \(\sigma\)? Using the above example, we can ask what the probability is of observing values between \(200\) and \(700\) ms:

pnorm(700, mean = 500, sd = 100) - pnorm(200, mean = 500, sd = 100)## [1] 0.976The probability that the random variable takes any specific point value is zero. This is because the probability in a continuous probability distribution is the area under the curve, and the area at any point on the x-axis is always zero. The implication here is that it is only meaningful to ask about probabilities between two different point values; e.g., the probability that \(Y\) lies between \(a\) and \(b\), or \(P(a<Y<b)\). Notice that \(P(a<Y<b)\) is the same statement as \(P(a\leq Y\leq b)\). Of course, for any particular point value, the PDF itself does not return the value zero; but the value returned by the PDF is not the probability of that particular value occurring. It is the density of that particular value; and if the PDF is seen as a function of the parameters, it is the likelihood of that particular value.

- What is the quantile \(q\) such that the probability is \(p\) of observing that value \(q\) or a value more extreme than \(q\)? For example, we can work out the quantile \(q\) such that the probability of observing \(q\) or some value less than it is \(0.975\), in the \(\mathit{Normal}(500,100)\) distribution. Formally, we would write this as \(P(Y<a)\).

qnorm(0.975, mean = 500, sd = 100)## [1] 696The above output says that the quantile value \(q\) such that \(Prob(X<q)=0.975\) is \(q=696\).

- Generate simulated data. Given a vector of \(n\) independent and identically distributed data \(y\), i.e., given that each data point is being generated independently from \(Y \sim \mathit{Normal}(\mu,\sigma)\) for some values of the parameters, the sample mean and standard deviation6 are:

\[\begin{equation} \bar{y} = \frac{\sum_{i=1}^n y_i}{n} \end{equation}\]

\[\begin{equation} sd(y) = \sqrt{\frac{\sum_{i=1}^n (y_i- \bar{y})^2}{n}} \end{equation}\]

For example, we can generate \(10\) data points using the rnorm() function, and then use the simulated data to compute the mean and standard deviation:

y <- rnorm(10, mean = 500, sd = 100)

mean(y)## [1] 513sd(y)## [1] 92Again, the sample mean and sample standard deviation computed from a particular (simulated or real) data set need not necessarily be close to the true values of the respective parameters. Especially when sample size is small, one can end up with mis-estimates of the mean and standard deviation.

Incidentally, simulated data can be used to generate all kinds of statistics. For example, we can compute the lower and upper quantiles such that 95% of the simulated data are contained within these quantiles:

quantile(y, probs = c(0.025, 0.975))## 2.5% 97.5%

## 356 640Later on, this function will be used to generate summary statistics once we have obtained samples of a parameter after we have fit a model using Stan/brms.

1.5.1 An important distinction: probability vs. density in a continuous random variable

In continuous distributions like the normal discussed above, it is important to understand that the probability density function or PDF, \(p(y| \mu, \sigma)\) defines a mapping from the \(y\) values (the possible values that the data can have) to a quantity called the density of each possible value. We can see this function in action when we use dnorm() to compute, say, the density value corresponding to \(y=1\) and \(y=2\) in the standard normal distribution, that is, a normal distribution with a mean of zero and a standard deviation of one.

## density:

dnorm(1, mean = 0, sd = 1)## [1] 0.242dnorm(2, mean = 0, sd = 1)## [1] 0.054If the density at a particular point value like \(1\) is high compared to some other value (\(2\) in the above example) then this point value \(1\) has a higher likelihood than \(2\) in the standard normal distribution.

The quantity computed for the values \(1\) and \(2\) are not the probability of observing \(1\) or \(2\) in this distribution. As mentioned earlier, probability in a continuous distribution is defined as the area under the curve, and this area will always be zero at any point value (because there are infinitely many different possible values). If we want to know the probability of obtaining values between an upper and lower bound \(b\) and \(a\), i.e., \(P(a<Y<b)\) where these are two distinct values, we must use the cumulative distribution function or CDF: in R, for the normal distribution, this is the pnorm() function. For example, the probability of observing a value between \(+2\) and \(-2\) in a normal distribution with mean \(0\) and standard deviation \(1\) is:

pnorm(2, mean = 0, sd = 1) - pnorm(-2, mean = 0, sd = 1)## [1] 0.954The situation is different in discrete random variables. These have a probability mass function (PMF) associated with them—an example is the binomial distribution that we saw earlier. There, the PMF maps the possible \(y\) values to the probabilities of those values occurring. That is why, in the binomial distribution, the probability of observing exactly \(2\) successes when sampling from a \(\mathit{Binomial}(n=10,\theta=0.5)\) can be computed using either dbinom() or pbinom():

dbinom(2, size = 10, prob = 0.5)## [1] 0.0439pbinom(2, size = 10, prob = 0.5) - pbinom(1, size = 10, prob = 0.5)## [1] 0.0439In the second line of code above, we are computing the cumulative probability of observing two or less successes, minus the probability of observing one or less successes. This gives us the probability of observing exactly two successes. The dbinom() gives us this same information.

1.5.2 Truncating a normal distribution

In the above discussion, the support for the normal distribution ranges from minus infinity to plus infinity. One can define PDFs with a more limited support; an example would be a normal distribution whose PDF \(f(x)\) is such that the lower bound is truncated at \(0\) to allow only positive values. In such a case, the area under the range minus infinity to zero (\(\int_{-\infty}^0 f(x) \, \mathrm{d}x\)) will be \(0\) because the range lies outside the support of the truncated normal distribution. Also, if one truncates the standard normal (\(\mathit{Normal}(0,1)\)) at \(0\), in order to make the area between zero and plus infinity sum up to \(1\), we would have to multiply it by \(2\), because we just halved the area under the curve. More formally and more generally, we would have to multiply the truncated distribution \(f(x)\) by some factor \(k\) such that the following integral sums to \(1\):

\[\begin{equation} k \int_{0}^{\infty} f(x)\, \mathrm{d}x = 1 \tag{1.1} \end{equation}\]

Clearly, this factor is \(k = \frac{1}{\int_{0}^{\infty} f(x)\, \mathrm{d}x}\). For the standard normal, this integral is easy to compute using R; we just calculate the complement of the cumulative distribution (CCDF):

pnorm(0, mean = 0, sd = 1, lower.tail = FALSE)## [1] 0.5## alternatively:

1 - pnorm(0, mean = 0, sd = 1, lower.tail = TRUE)## [1] 0.5The above calculation implies that \(k\) is indeed \(2\), as we informally argued (\(k = \frac{1}{0.5}=2\)).

Also, if we had truncated the distribution at 0 to the right instead of the left (allowing only negative values), we would have to find the factor \(k\) in the same way as above, except that we would have to find \(k\) such that:

\[\begin{equation} k \int_{-\infty}^{0} f(x)\, \mathrm{d}x = 1 \end{equation}\]

For the standard normal case, in R, this factor would require us to use the CDF:

pnorm(0, mean = 0, sd = 1, lower.tail = TRUE)## [1] 0.5Later in this book, we will be using such truncated distributions when doing Bayesian modeling, and when we use them, we will want to multiply the truncated distribution by the factor \(k\) to ensure that it is still a proper PDF whose area under the curve sums to \(1\). Truncated normal distributions are discussed in more detail in the online section A.2.

1.6 Bivariate and multivariate distributions

So far, we have only discussed univariate distributions; these are distributions that involve only one variable. For example, when we talk about data generated from a Binomial distribution, or from a normal distribution, these are univariate distributions.

It is also possible to specify distributions with two or more dimensions. Some examples will make it clear what a bivariate (or more generally, multivariate) distribution is.

1.6.1 Example 1: Discrete bivariate distributions

Starting with the discrete case, consider the discrete bivariate distribution shown below. These are data from an experiment where, inter alia, in each trial a Likert acceptability rating and a question-response accuracy were recorded (the data are from a study by Laurinavichyute 2020, used with permission here). Load the data by loading the R package bcogsci.

data("df_discreteagrmt")Figure 1.6 shows the joint probability mass function of two random variables X and Y. The random variable \(X\) consists of \(7\) possible values (this is the \(1-7\) Likert response scale), and the random variable \(Y\) is question-response accuracy, with \(0\) representing an incorrect response, and \(1\) representing a correct response. One can also display Figure 1.6 as a table; see Table 1.3.

FIGURE 1.6: Example of a discrete bivariate distribution. In these data, in every trial, two pieces of information were collected: Likert responses and yes-no question responses. The random variable X represents Likert scale responses on a scale of 1-7. and the random variable Y represents 0, 1 (incorrect, correct) responses to comprehension questions.

| \(x=1\) | \(x=2\) | \(x=3\) | \(x=4\) | \(x=5\) | \(x=6\) | \(x=7\) | |

|---|---|---|---|---|---|---|---|

| \(y=0\) | \(0.018\) | \(0.023\) | \(0.04\) | \(0.043\) | \(0.063\) | \(0.049\) | \(0.055\) |

| \(y=1\) | \(0.031\) | \(0.053\) | \(0.086\) | \(0.096\) | \(0.147\) | \(0.153\) | \(0.142\) |

For each possible pair of values of \(X\) and \(Y\), we have a joint probability \(p_{X,Y}(x,y)\). Given such a bivariate distribution, there are two useful quantities we can compute: the marginal distributions (\(p_{X}\) and \(p_Y\)), and the conditional distributions (\(p_{X|Y}\) and \(p_{Y|X}\)). Table 1.3 shows the joint probability mass function \(p_{X,Y}(x,y)\).

1.6.1.1 Marginal distributions

The marginal distribution \(p_Y\) is defined as follows. \(S_{X}\) is the support of \(X\), i.e., all the possible values of \(X\).

\[\begin{equation} p_{Y}(y)=\sum_{x\in S_{X}}p_{X,Y}(x,y).\label{eq-marginal-pmf} \end{equation}\]

Similarly, the marginal distribution \(p_X\) is defined as:

\[\begin{equation} p_{X}(x)=\sum_{y\in S_{Y}}p_{X,Y}(x,y).\label{eq-marginal-pmf2} \end{equation}\]

\(p_Y\) is computed, by summing up the rows; and \(p_X\) by summing up the columns. We can see why this is called the marginal distribution; the result appears in the margins of the table. In the code below, the object probs contains bivariate PMF shown in Table 1.3.

# P(Y)

(PY <- rowSums(probs))## y=0 y=1

## 0.291 0.709sum(PY) ## sums to 1## [1] 1# P(X)

(PX <- colSums(probs))## x=1 x=2 x=3 x=4 x=5 x=6 x=7

## 0.0491 0.0766 0.1257 0.1394 0.2102 0.2020 0.1969sum(PX) ## sums to 1## [1] 1The marginal probabilities sum to \(1\), as they should. Table 1.4 shows the marginal probabilities.

| \(x=1\) | \(x=2\) | \(x=3\) | \(x=4\) | \(x=5\) | \(x=6\) | \(x=7\) | \(P(Y)\) | |

|---|---|---|---|---|---|---|---|---|

| \(y=0\) | \(0.018\) | \(0.023\) | \(0.04\) | \(0.043\) | \(0.063\) | \(0.049\) | \(0.055\) | \(0.291\) |

| \(y=1\) | \(0.031\) | \(0.053\) | \(0.086\) | \(0.096\) | \(0.147\) | \(0.153\) | \(0.142\) | \(0.709\) |

| \(P(X)\) | \(0.049\) | \(0.077\) | \(0.126\) | \(0.139\) | \(0.21\) | \(0.202\) | \(0.197\) |

To compute the marginal distribution of \(X\), one is summing over all the \(Y\)’s; and to compute the marginal distribution of \(Y\), one sums over all the \(X\)’s. We say that we are marginalizing out the random variable that we are summing over. One can also visualize the two marginal distributions using barplots (Figure 1.7).

FIGURE 1.7: The marginal distributions of the random variables X and Y, presented as barplots.

1.6.1.2 Conditional distributions

For computing conditional distributions, recall that conditional probability (see section 1.2) is defined as:

\[\begin{equation} p_{X\mid Y}(x\mid y) = \frac{p_{X,Y}(x,y)}{p_Y(y)} \end{equation}\]

and

\[\begin{equation} p_{Y\mid X}(y\mid x) = \frac{p_{X,Y}(x,y)}{p_X(x)} \end{equation}\]

The conditional distribution of a random variable \(X\) given that \(Y=y\), where \(y\) is some specific (fixed) value, is:

\[\begin{equation} p_{X\mid Y} (x\mid y) = \frac{p_{X,Y}(x,y)}{p_Y(y)} \quad \hbox{provided } p_Y(y)=P(Y=y)>0 \end{equation}\]

As an example, let’s consider how \(p_{X\mid Y}\) would be computed. The possible values of \(y\) are \(0,1\), and so we have to find the conditional distribution (defined above) for each of these values. That is, we have to find \(p_{X\mid Y}(x\mid y=0)\), and \(p_{X\mid Y}(x\mid y=1)\).

Let’s do the calculation for \(p_{X\mid Y}(x\mid y=0)\).

\[\begin{equation} \begin{split} p_{X\mid Y} (1\mid 0) =& \frac{p_{X,Y}(1,0)}{p_Y(0)}\\ =& \frac{0.018}{0.291}\\ =& 0.062 \end{split} \end{equation}\]

This conditional probability value will occupy the cell \(X=1\), \(Y=0\) in Table 1.5 summarizing the conditional probability distribution \(p_{X|Y}\). In this way, one can fill in the entire table, which will then represent the conditional distributions \(p_{X|Y=0}\) and \(p_{X|Y=1}\). The reader may want to take a few minutes to complete Table 1.5. After the conditional probabilities have been computed, the rows should sum up to \(1\).

| \(x=1\) | \(x=2\) | \(x=3\) | \(x=4\) | \(x=5\) | \(x=6\) | \(x=7\) | |

|---|---|---|---|---|---|---|---|

| \(p_{X|Y(x|y=0)}\) | \(0.062\) | ||||||

| \(p_{X|Y(x|y=1)}\) |

Similarly, one can construct a table that shows \(p_{Y|X}\).

1.6.1.3 Covariance and correlation

Here, we briefly define the covariance and correlation of two discrete random variables. For detailed examples and discussion, see the references at the end of the chapter. Informally, if there is a high probability that large values of a random variable \(X\) are associated with large values of another random variable \(Y\), we will say that the covariance between the two random variable \(X\) and \(Y\), written \(Cov(X,Y)\), is positive.

The covariance of two (discrete) random variables \(X\) and \(Y\) is defined as follows. \(E[\cdot]\) refers to the expectation of a random variable.

\[\begin{equation} Cov(X,Y) = E[(X - E[X])(Y-E[Y])] \end{equation}\]

It is possible to show that this is equivalent to:

\[\begin{equation} Cov(X,Y) = E[XY] - E[X]E[Y] \end{equation}\]

The expectation E[XY] is defined to be:

\[\begin{equation} E[XY]=\sum_x \sum_y xy f_{X,Y}(x,y) \end{equation}\]

If the standard deviations of the two random variables is \(\sigma_X\) and \(\sigma_Y\), the correlation between the two random variables, \(\rho_{XY}\), is defined as:

\[\begin{equation} \rho_{XY} = \frac{Cov(X,Y)}{\sigma_X\sigma_Y} \end{equation}\]

1.6.2 Example 2: Continuous bivariate distributions

Consider now the continuous bivariate case; this time, we will use simulated data. Consider two normal random variables \(X\) and \(Y\), each of which coming from, for example, a \(\mathit{Normal}(0,1)\) distribution, with some correlation \(\rho_{X,Y}\) between the two random variables.

A bivariate distribution for two random variables \(X\) and \(Y\), each of which comes from a normal distribution, is expressed in terms of the means and standard deviations of each of the two distributions, and the correlation \(\rho_{XY}\) between them. The standard deviations and correlation in a bivariate distribution are expressed in a special form of a \(2\times 2\) matrix called a variance-covariance matrix \(\Sigma\). If \(\rho_{XY}\) is the correlation between the two random variables, and \(\sigma _{X}\) and \(\sigma _{Y}\) the respective standard deviations, the variance-covariance matrix is written as:

\[\begin{equation}\label{eq:covmatfoundations} \Sigma = \begin{pmatrix} \sigma _{X}^2 & \rho_{XY}\sigma _{X}\sigma _{Y}\\ \rho_{XY}\sigma _{X}\sigma _{Y} & \sigma _{Y}^2\\ \end{pmatrix} \end{equation}\]

The off-diagonals of this matrix contain the covariance between \(X\) and \(Y\).

The joint distribution of \(X\) and \(Y\) is defined as follows:

\[\begin{equation}\label{eq:jointpriordistfoundations} \begin{pmatrix} X \\ Y \\ \end{pmatrix} \sim \mathcal{N}_2 \left( \begin{pmatrix} 0 \\ 0 \\ \end{pmatrix}, \Sigma \right) \end{equation}\]

The joint PDF is written with reference to the two variables \(f_{X,Y}(x,y)\). It has the property that the volume under the surface that is bounded by the density function sums to 1—this sum-to-1 property is the same idea that we encountered in the univariate cases (the normal and binomial distributions), except that we are talking about a bivariate distribution here, so we talk about the volume under the surface rather than the area under the curve.

Formally, we would write the volume as a double integral: we are summing up the volume under the surface representing the joint density for \(X\) and \(Y\) (hence the two integrals).

\[\begin{equation} \iint_{S_{X,Y}} f_{X,Y}(x,y)\, \mathrm{d}x \mathrm{d}y = 1 \end{equation}\]

Here, the terms \(\mathrm{d}x\) and \(\mathrm{d}y\) express the fact that we are summing up the volume under the surface.

The joint CDF would be written as follows. The equation below gives us the probability of observing a value like \((u,v)\) or some value smaller than that (i.e., some \((u',v')\), such that \(u'<u\) and \(v'<v\)).

\[\begin{equation} \begin{split} F_{X,Y}(u,v) =& P(X<u,Y<v)\\ =& \int_{-\infty}^u \int_{-\infty}^v f_{X,Y}(x,y)\, \mathrm{d}y \mathrm{d}x\\ \end{split} \end{equation}\]

Just as in the discrete case, the marginal distributions can be derived by marginalizing out the other random variable:

\[\begin{equation} f_X(x) = \int_{S_Y} f_{X,Y}(x,y)\, \mathrm{d}y, \quad f_Y(y) = \int_{S_X} f_{X,Y}(x,y)\, \mathrm{d}x \end{equation}\]

Here, \(S_X\) and \(S_Y\) are the respective supports.

Here, the integral sign \(\int\) is the continuous equivalent of the summation sign \(\sum\) in the discrete case. Luckily, we will never have to compute such integrals ourselves; but it is important to appreciate how a marginal distribution arises from a bivariate distribution—by integrating out or marginalizing out the other random variable.

A visualization will help. The figures below show a bivariate distribution with zero correlation (Figure 1.8), a negative (Figure 1.9) and a positive correlation (Figure 1.10).

FIGURE 1.8: A bivariate normal distribution with zero correlation. Shown are four plots: the top-right plot shows the three-dimensional bivariate density, the top-left plot the contour plot of the distribution (seen from above). The lower plots show the cumulative distribution function from two views, as a three-dimensional plot and as a contour plot.

FIGURE 1.9: A bivariate normal distribution with a negative correlation of -0.6. Shown are four plots: the top-right plot shows the three-dimensional bivariate density, the top-left plot the contour plot of the distribution (seen from above). The lower plots show the cumulative distribution function from two views, as a three-dimensional plot and as a contour plot.

FIGURE 1.10: A bivariate normal distribution with a positive correlation of 0.6. Shown are four plots: the top-right plot shows the three-dimensional bivariate density, the top-left plot the contour plot of the distribution (seen from above). The lower plots show the cumulative distribution function from two views, as a three-dimensional plot and as a contour plot.

In this book, we will make use of such multivariate distributions a lot, and it will soon become important to know how to generate simulated bivariate or multivariate data that is correlated. So let’s look at that next.

1.6.3 Generate simulated bivariate (or multivariate) data

Suppose we want to generate \(100\) pairs of correlated continuous data, with correlation \(\rho=0.6\). The two random variables both have a normal PDF, and have mean \(0\), and standard deviations \(5\) and \(10\), respectively.

Here is how we would generate such data. First, define a variance-covariance matrix; then, use the multivariate analog of the rnorm() function, mvrnorm(), from the MASS package to generate \(100\) data points.

## define a variance-covariance matrix:

Sigma <- matrix(c(5 ^ 2, 5 * 10 * 0.6, 5 * 10 * 0.6, 10 ^ 2),

byrow = FALSE, ncol = 2)

## generate data:

u <- mvrnorm(n = 100, mu = c(0, 0), Sigma = Sigma)

head(u, n = 3)## [,1] [,2]

## [1,] -2.50 -14.5

## [2,] 6.71 13.1

## [3,] 10.66 24.7Figure 1.11 confirms that the simulated data are positively correlated.

FIGURE 1.11: The relationship between two positively correlated random variables, generated by simulating data using the R function mvrnorm from the MASS library.

1.6.4 Decomposing a variance-covariance matrix

One final useful fact about the variance-covariance matrix—one that we will need later—is that it can be decomposed into the component standard deviations and an underlying correlation matrix. For example, consider the matrix above:

Sigma## [,1] [,2]

## [1,] 25 30

## [2,] 30 100One can decompose the matrix as follows. The matrix can be seen as the product of a diagonal matrix of the standard deviations and the correlation matrix:

\[\begin{equation} \begin{pmatrix} 5 & 0\\ 0 & 10\\ \end{pmatrix} \begin{pmatrix} 1.0 & 0.6\\ 0.6 & 1.0\\ \end{pmatrix} \begin{pmatrix} 5 & 0\\ 0 & 10\\ \end{pmatrix} \end{equation}\]

One can reassemble the variance-covariance matrix by pre-multiplying and post-multiplying the correlation matrix with the diagonal matrix containing the standard deviations:7

\[\begin{equation} \begin{pmatrix} 5 & 0\\ 0 & 10\\ \end{pmatrix} \begin{pmatrix} 1.0 & 0.6\\ 0.6 & 1.0\\ \end{pmatrix} \begin{pmatrix} 5 & 0\\ 0 & 10\\ \end{pmatrix} = \begin{pmatrix} 25 & 30\\ 30 & 100\\ \end{pmatrix} \end{equation}\]

Using R (the symbol %*% is the matrix multiplication operator in R):

## sds:

(sds <- c(5, 10))## [1] 5 10## diagonal matrix:

(sd_diag <- diag(sds))## [,1] [,2]

## [1,] 5 0

## [2,] 0 10## correlation matrix:

(corrmatrix <- matrix(c(1, 0.6, 0.6, 1), ncol = 2))## [,1] [,2]

## [1,] 1.0 0.6

## [2,] 0.6 1.0sd_diag %*% corrmatrix %*% sd_diag## [,1] [,2]

## [1,] 25 30

## [2,] 30 100This decomposition and reassembly of the variance-covariance matrix will become important when we start building hierarchical models in Stan.

1.7 An important concept: The marginal likelihood (integrating out a parameter)

Here, we introduce a concept that will turn up many times in this book. The concept we unpack here is called “integrating out a parameter.” We will need this when we encounter Bayes’ rule in the next chapter, and when we use Bayes factors later in the book (chapter 13).

Integrating out a parameter refers to the following situation. The example used here discusses the binomial distribution, but the approach is generally applicable for any distribution.

Suppose we have a binomial random variable \(Y\) with PMF \(p(Y)\). Suppose also that this PMF is defined in terms of parameter \(\theta\) that can have only three possible values, \(0.1, 0.5, 0.9\), each with equal probability. In other words, the probability that \(\theta\) is \(0.1, 0.5,\) or \(0.9\) is \(1/3\) each.

We stick with our earlier example of \(n=10\) trials and \(k=7\) successes. The likelihood function then is

\[\begin{equation} p(k=7,n=10|\theta) = \binom{10}{7} \theta^7 (1-\theta)^{3} \end{equation}\]

There is a related concept of marginal likelihood, which we can write here as \(p(k=7,n=10)\). Marginal likelihood is the likelihood computed by “marginalizing” out the parameter \(\theta\): for each possible value that the parameter \(\theta\) can have, we compute the likelihood at that value and multiply that likelihood with the probability/density of that \(\theta\) value occurring. Then we sum up each of the products computed in this way. Mathematically, this means that we carry out the following operation.

In our example, there are three possible values of \(\theta\), call them \(\theta_1=0.1\), \(\theta_2=0.5\), and \(\theta_3=0.9\). Each has probability \(1/3\); so \(p(\theta_1)=p(\theta_2)=p(\theta_3)=1/3\). Given this information, we can compute the marginal likelihood as follows:

\[\begin{equation} \begin{split} p(k=7,n=10) =& \binom{10}{7} \theta_1^7 (1-\theta_1)^{3} \times p(\theta_1) \\ +& \binom{10}{7} \theta_2^7 (1-\theta_2)^{3}\times p(\theta_2) \\ +& \binom{10}{7} \theta_3^7 (1-\theta_3)^{3}\times p(\theta_3) \end{split} \end{equation}\]

Writing the \(\theta\) values and their probabilities, we get:

\[\begin{equation} \begin{split} p(k=7,n=10) =& \binom{10}{7} 0.1^7 (1-0.1)^{3} \times \frac{1}{3} \\ +& \binom{10}{7} 0.5^7 (1-0.5)^{3}\times \frac{1}{3} \\ +& \binom{10}{7} 0.9^7 (1-0.9)^{3}\times \frac{1}{3} \end{split} \end{equation}\]

Taking the common factors (\(\frac{1}{3}\) and \(\binom{10}{7}\)) out:

\[\begin{equation} \begin{split} p(k=7,n=10) =& \frac{1}{3} \binom{10}{7} \big[ 0.1^7 (1-0.1)^{3} \\ +& 0.5^7 (1-0.5)^{3} \\ +& 0.9^7 (1-0.9)^{3} \big] \\ =& 0.058 \end{split} \end{equation}\]

Thus, a marginal likelihood is a kind of weighted sum of the likelihood, weighted by the possible values of the parameter.8

The above example was contrived, because we stated that the parameter \(\theta\) has only three possible discrete values. Now consider the case where the parameter \(\theta\) can have all possible values between \(0\) and \(1\); every possible value is equally likely. Formally, what this means is that the possible values of \(\theta\) can be described in terms of the uniform distribution with lower bound \(0\) and upper bound \(1\) (for more details on this distribution, see section 5.2 in Blitzstein and Hwang 2014). This is a continuous distribution, and because the area under the distribution has to sum to \(1\), the density of every possible value of \(\theta\) is \(1\).

In this example, the summation now has to be done over a continuous space \([0,1]\). The way this summation is expressed in mathematics is through the integral symbol:

\[\begin{equation} p(k=7,n=10) = \int_0^1 \binom{10}{7} \theta^7 (1-\theta)^{3}\, p(\theta) d\theta \end{equation}\]

Because \(p(\theta)=1\) for all \(\theta\) (in this particular case), we can just write:

\[\begin{equation} p(k=7,n=10) = \int_0^1 \binom{10}{7} \theta^7 (1-\theta)^{3}\, d\theta \end{equation}\]

This statement is computing something similar to what we computed above with the three discrete parameter values, except that the summation is being done over a continuous space ranging from 0 to 1. We say that the parameter \(\theta\) has been integrated out, or marginalized. Integrating out a parameter will be a very common operation in this book, but we will never have to do the calculation ourselves. For the above case, we can compute the integral in R:

BinLik <- function(theta) {

choose(10, 7) * theta^7 * (1 - theta)^3

}

integrate(BinLik, lower = 0, upper = 1)$value## [1] 0.0909The value that is output by the integrate() function above is the marginal likelihood.

This completes our discussion of random variables and probability distributions. Next, we summarize what we have learned so far about univariate distributions.

1.8 Summary of some useful R functions

Table 1.6 summarizes the different functions relating to PMFs and PDFs, using the binomial and normal as examples.

| Discrete | Continuous | |

|---|---|---|

| Example: | \(\mathit{Binomial}(y|n,\theta)\) | \(\mathit{Norma}l(y|\mu,\sigma)\) |

| Likelihood function | dbinom() |

dnorm() |

| Prob Y=y | dbinom() |

always 0 |

| Prob \(Y\geq y, Y\leq y, y_1<Y<y_2\) | pbinom() |

pnorm() |

| Inverse CDF | qbinom() |

qnorm() |

| Generate simulated data | rbinom() |

rnorm() |

Later on, we will use other distributions, such as the uniform, beta, etc., and each of these has their own set of d-p-q-r functions in R. One can look up these different distributions in, for example, Blitzstein and Hwang (2014).

1.9 Summary

This chapter briefly reviewed some very basic concepts in probability theory, univariate discrete and continuous random variables, and bivariate distributions. An important set of functions we encountered are the d-p-q-r family of functions for different distributions; these are very useful for understanding the properties of commonly used distributions, visualizing distributions, and for simulating data. Distributions will play a central role in this book; for example, knowing how to visualize distributions will be important for deciding on prior distributions for parameters. Other important ideas we learned about were marginal and conditional probability, marginal likelihood, and how to define multivariate distributions; these concepts will play an important role in Bayesian statistics.

1.10 Further reading

An informal but useful discussion about probability appears in Spiegelhalter (2024). A quick review of the mathematical foundations needed for statistics is available in the short book by Fox (2009), as well as Gill (2006). A more comprehensive introduction to the mathematical background needed for advanced Bayesian modeling is Jordan and Smith (2008). Morin (2016) and Blitzstein and Hwang (2014) are accessible introductions to probability theory. Ross (2002) offers a more advanced treatment which discusses random variable theory and illustrates applications of probability theory. Some basic results about random variables are also discussed in the above-mentioned textbooks (for example, the expectation and variance of sums of random variables); some of these results will be needed in later chapters of the present book. A good formal introduction to mathematical statistics (covering classical frequentist theory) is Miller and Miller (2004). The freely available book by Kerns (2014) introduces frequentist and Bayesian statistics from the ground up in a very comprehensive and systematic manner; the source code for the book is available from https://github.com/gjkerns/IPSUR. The open-access book, Probability and Statistics: a simulation-based introduction, by Bob Carpenter is also worth studying: https://github.com/bob-carpenter/prob-stats. A thorough introduction to the matrix algebra needed for statistics, with examples using R, is provided in Fieller (2016). Commonly used probability distributions are presented in detail in Miller and Miller (2004), Blitzstein and Hwang (2014), and Ross (2002). A useful reference for continuous univariate distributions is Johnson, Kotz, and Balakrishnan (1995).

References

Blitzstein, Joseph K., and Jessica Hwang. 2014. Introduction to Probability. Chapman; Hall/CRC.

Fieller, Nick. 2016. Basics of Matrix Algebra for Statistics with R. Boca Raton, FL: CRC Press.

Fox, John. 2009. A Mathematical Primer for Social Statistics. Vol. 159. Sage.

Gill, Jeff. 2006. Essential Mathematics for Political and Social Research. Cambridge University Press Cambridge.

Johnson, Norman L., Samuel Kotz, and Narayanaswamy Balakrishnan. 1995. Continuous Univariate Distributions, Volume 2. Vol. 289. John Wiley; Sons.

Jordan, Dominic, and Peter Smith. 2008. Mathematical Techniques: An Introduction for the Engineering, Physical, and Mathematical Sciences. Oxford University Press, Oxford.

Kerns, G. J. 2014. Introduction to Probability and Statistics Using R. Second Edition.

Kolmogorov, Andreı̆ Nikolaevich. 1933. Foundations of the Theory of Probability: Second English Edition. Courier Dover Publications.

Laurinavichyute, Anna. 2020. “Similarity-Based Interference and Faulty Encoding Accounts of Sentence Processing.” Dissertation, University of Potsdam. https://publishup.uni-potsdam.de/frontdoor/index/index/docId/50966.

Miller, I., and M. Miller. 2004. John E. Freund’s Mathematical Statistics with Applications. Upper Saddle River, NJ: Prentice Hall.

Morin, David J. 2016. Probability: For the Enthusiastic Beginner. Createspace Independent Publishing Platform.

Resnick, Sidney. 2019. A Probability Path. Springer.

Ross, Sheldon. 2002. A First Course in Probability. Pearson Education.

Spiegelhalter, David J. 2024. “Why Probability Probably Doesn’t Exist (but It Is Useful to Act Like It Does).” Nature 636 (8043): 560–63.

Steyer, Rolf, and Werner Nagel. 2017. Probability and Conditional Expectation: Fundamentals for the Empirical Sciences. Vol. 5. John Wiley & Sons.

When the sample space \(\Omega\) is finite, any subset of \(\Omega\) can be an event; in this case, the event space \(F\) is the collection of all possible subsets of \(\Omega\). In set theory, a set of all the subsets of another set is called a power set, and the number of subsets of any set with \(n\) elements is \(2^n\). For example, for a standard six-sided die, the sample space is the set \(\Omega=\{1,2,3,4,5,6\}\) and the event space \(F\) will contain \(2^6 = 64\) sets: the empty set, six one-element sets, \(15\) two-element sets, \(20\) three-element sets, …, and one six-element set. Here are three assumptions we will always make about any event space: (a) Both the empty set and universal set (\(\Omega\)) belong to the event space \(F\); (b) if \(E\) is an event, then so is the complement of \(E\); and (c) for any list of events \(A_1, A_2,...\) (finite or infinite), the phrase “\(A_1\) or \(A_2\) or …” describes another event.↩︎

Here, we use \(Y\), but we could have used any letter, such as \(X, Z,...\). Later on, in some situations we will use Greek letters like \(\theta, \mu, \sigma\) to represent a random variable.↩︎

The actual formal definition of random variable is more complex, and it is based on measure theory. A more rigorous definition can be found in, for example, Steyer and Nagel (2017) and Resnick (2019).↩︎

A notational aside: In frequentist treatments, the PMF would usually be written \(p(y;\theta)\), i.e., with a semi-colon rather than the conditional distribution marked by the vertical bar. The semi-colon is intended to indicate that in the frequentist paradigm, the parameters are fixed point values; by contrast, in the Bayesian paradigm, parameters are random variables. This has the consequence that for the Bayesian, the distribution of \(y\), \(p(y)\) is really a conditional distribution, conditional on a random variable, here \(\theta\). For the frequentist, \(p(y)\) requires some point value for \(\theta\), but it cannot be a conditional distribution because \(\theta\) is not a random variable. We define conditional distributions later in this section.↩︎

Looking ahead to the rest of the book, in the Bayesian approach, the parameter of interest, \(\theta\) in the present example, never has just a true unknown point value associated with it; instead, we use a probability distribution to express our belief about plausible values of the parameter. However, throughout this book, when generating simulated data, we will often use point values for parameters. These point values are adopted simply to evaluate the behavior of the model being investigated.↩︎

R will compute the standard deviation by dividing by \(n-1\), not \(n\); this is because dividing by \(n\) gives a biased estimate (chapter 10 of Miller and Miller 2004). This is not an important detail for our purposes, and in any case for large \(n\) it doesn’t really matter whether one divides by \(n\) or \(n-1\).↩︎

There is a built-in convenience function,

sdcor2covin theSINpackage that does this calculation, taking the vector of standard deviations (not the diagonal matrix) and the correlation matrix to yield the variance-covariance matrix:sdcor2cov(stddev = sds, corr = corrmatrix).↩︎Where does the above formula come from? It falls out from the law of total probability discussed above.↩︎