Chapter 5 Bayesian hierarchical models

Usually, experimental data in cognitive science contain “clusters.” These are natural groups that contain observations that are more similar within the clusters than between them. The most common examples of clusters in experimental designs are subjects and experimental items (e.g., words, pictures, objects that are presented to the subjects). These clusters arise because we have multiple (repeated) observations for each subject, and for each item. Such data are sometimes also called dependent data, because the observations within a cluster are not independent. If we want to incorporate this grouping structure in our analysis, we generally use a hierarchical model (also called multi-level or mixed models, Pinheiro and Bates 2000). This kind of clustering and hierarchical modeling arises as a consequence of the idea of exchangeability.

5.1 Exchangeability and hierarchical models

Exchangeability is the Bayesian analog of the phrase “independent and identically distributed” that appears regularly in classical (i.e., frequentist) statistics. Some connections and differences between exchangeability and the frequentist concept of independent and identically distributed (iid) are detailed in online section A.6.

Informally, the idea of exchangeability is as follows. Suppose we assign a numerical index to each of the levels of a group (e.g., to each subject). When the levels are exchangeable, we can reassign the indices arbitrarily and lose no information; that is, the joint distribution will remain the same, because we don’t have any different prior information for each cluster (here, for each subject). In hierarchical models, we treat the levels of the group as exchangeable, and observations within each level in the group as also exchangeable. We generally include predictors at the level of the observations, those are the predictors that correspond to the experimental manipulations (e.g., attentional load, trial number, cloze probability, etc.); and maybe also at the group level, these are predictors that indicate characteristics of the levels in the group (e.g., the working memory capacity score of each subject). Then the conditional distributions given these explanatory variables would be exchangeable; that is, our predictors incorporate all the information that is not exchangeable, and when we factor the predictors out, the observations or units in the group are exchangeable. This is the reason why the item number is an appropriate cluster, but trial number is not: In the first case, if we permute the numbering of the items there is no loss of information because the indexes are exchangeable, all the information about the items is incorporated as predictors in the model. In the second case, the numbering of the trials include information that will be lost if we treat them as exchangeable. For example, consider the case where, as trial numbers increase, subjects get more experienced or fatigued. Even if we are not interested in the specific cluster-level estimates, hierarchical models allow us to generalize to the underlying population (subjects, items) from which the clusters in the sample were drawn. Of course, this generalization is only possible if the samples are representative (e.g., if the subjects and items are randomly drawn); strictly speaking, this condition often does not hold in experimental fields like psychology and linguistics. For more on exchangeability, consult the further reading at the end of the chapter.

Exchangeability is important in Bayesian statistics because of a theorem called the Representation Theorem (de Finetti 1931). This theorem states that if a sequence of random variables is exchangeable, then the prior distributions on the parameters in a model are a necessary consequence; priors are not merely an arbitrary addition to the frequentist modeling approach that we are familiar with. For a detailed discussion on the Representation Theorem, see chapter 4 of O’Hagan and Forster (2004).

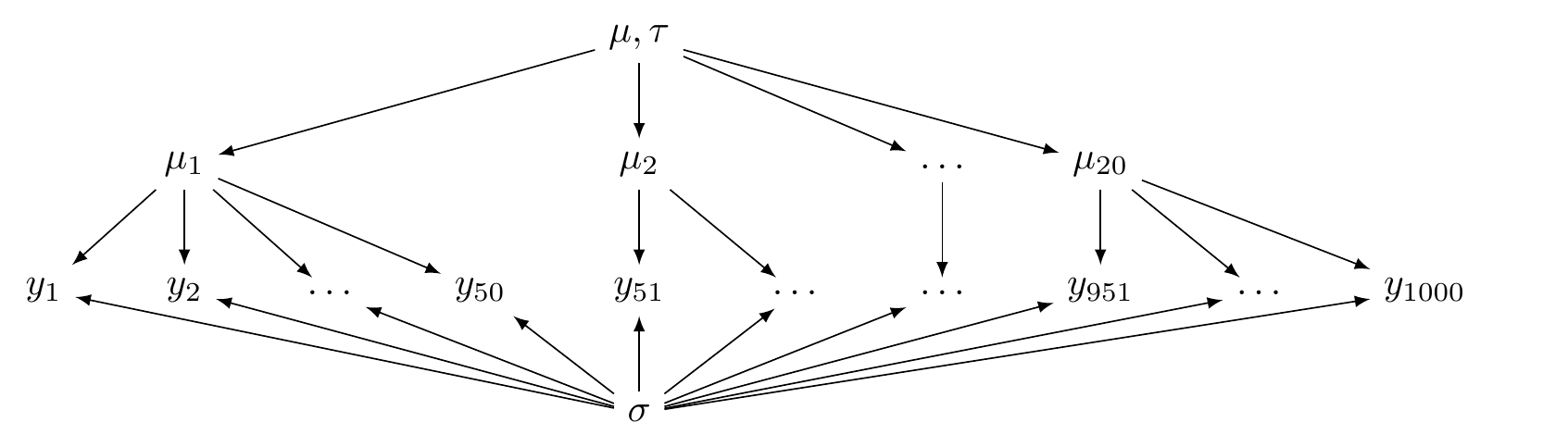

Furthermore, exchangeability has been shown (Bernardo and Smith 2009) to be mathematically equivalent to assuming a hierarchical structure in the model. The argument goes as follows. Suppose that the parameters for each level in a group are \(\mu_i\), where the levels are labeled \(i=1,\dots,I\). An example of groups is subjects. Suppose also that the data \(y_n\), where \(n=1,\dots,N\) are observations from these subjects (e.g., pupil size measurements). The data are assumed to be generated as

\[\begin{equation} y_n \sim \mathit{Normal}(\mu_{subj[n]},\sigma) \end{equation}\]

The notation \(subj[n]\), which roughly follows Gelman and Hill (2007), identifies the subject index. Suppose that each of 20 subjects provide 50 observations. If the data are ordered by subject id, then \(subj[1]\) to \(subj[50]\) corresponds to a subject with id \(i=1\), \(subj[51]\) to \(subj[100]\) corresponds to a subject with id \(i=2\), and so on.

We can code this representation in a straightforward way in R:

N_subj <- 20

N_obs_subj <- 50

N <- N_subj * N_obs_subj

df <- tibble(row = 1:N,

subj = rep(1:N_subj, each = N_obs_subj))

df## # A tibble: 1,000 × 2

## row subj

## <int> <int>

## 1 1 1

## 2 2 1

## 3 3 1

## # ℹ 997 more rows# Example:

c(df$subj[1], df$subj[2], df$subj[51])## [1] 1 1 2If the data \(y_n\) are exchangeable, the parameters \(\mu_i\) are also exchangeable. The fact that the \(\mu_i\) are exchangeable can be shown (Bernardo and Smith 2009) to be mathematically equivalent to assuming that they come from a common distribution, for example:

\[\begin{equation} \mu_i \sim \mathit{Normal}(\mu,\tau) \end{equation}\]

To make this more concrete, assume some completely arbitrary true values for the parameters, and generate observations based on a hierarchical process in R.

mu <- 100

tau <- 15

sigma <- 4

mu_i <- rnorm(N_subj, mu, tau)

df_h <- mutate(df, y = rnorm(N, mu_i[subj], sigma))

df_h## # A tibble: 1,000 × 3

## row subj y

## <int> <int> <dbl>

## 1 1 1 87.3

## 2 2 1 90.7

## 3 3 1 87.5

## # ℹ 997 more rowsThe parameters \(\mu\) and \(\tau\), called hyperparameters, are unknown and have prior distributions (hyperpriors) defined for them. This fact leads to a hierarchical relationship between the parameters: there is a common parameter \(\mu\) for each of the levels of a group (each of the subjects), and the parameters \(\mu_i\) are assumed to be generated as a function of this common parameter \(\mu\). Here, \(\tau\) represents between-group (here, between-subject) variability, and \(\sigma\) represents within-group (within-subject) variability. The three parameters have priors defined for them. The first two priors below are called hyperpriors.

- \(p(\mu)\)

- \(p(\tau)\)

- \(p(\sigma)\)

In such a model, information about \(\mu_i\) comes from two sources:

from each of the observed \(y_n\) corresponding to the respective \(\mu_{subj[n]}\) parameter, and

from the parameters \(\mu\) and \(\tau\) that led to all the other \(y_k\) (where \(k\neq n\)) being generated.

This is illustrated in Figure 5.1.

Fit this model in brms in the following way. For now, the prior distributions are arbitrary.

fit_h <- brm(y ~ 1 + (1 | subj), df_h,

prior =

c(prior(normal(50, 100), class = Intercept),

prior(normal(2, 5), class = sigma),

prior(normal(10, 10), class = sd)),

# increase iterations, adapt_delta to avoid convergence issues

iter = 5000,

control = list(adapt_delta = .99,

max_treedepth = 12))fit_h## ...

## Group-Level Effects:

## ~subj (Number of levels: 20)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS

## sd(Intercept) 15.13 2.54 11.05 21.32 1.01 564

## Tail_ESS

## sd(Intercept) 861

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 101.88 3.62 94.51 109.23 1.01 407 466

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 4.02 0.09 3.85 4.20 1.00 1809 2304

##

## ...In this output, Intercept corresponds to the posterior of \(\mu\), sigma to \(\sigma\), and sd(Intercept) to \(\tau\). There is more information in the brms object: we can also get the posteriors for each level of our group (i.e., for each subject). However, rather than estimating \(\mu_i\), brms estimates the adjustments to \(\mu\), \(u_i\), named r_subj[i,Intercept], so that \(\mu_i = \mu + u_i\). See the code below.

# Extract the posterior estimates of u_i

u_i_post <- as_draws_df(fit_h) %>%

select(starts_with("r_subj"))

# Extract the posterior estimate of mu

mu_post <- as_draws_df(fit_h)$b_Intercept

# Build the posterior estimate of mu_i

mu_i_post <- mu_post + u_i_post

colMeans(mu_i_post) %>% unname()## [1] 91.8 96.3 123.2 101.0 102.0 126.4 107.0 80.4 90.3 93.4 118.9

## [12] 104.5 106.0 101.0 92.7 126.5 107.7 71.4 110.3 93.0# Compare with true values

mu_i## [1] 91.6 96.5 123.4 101.1 101.9 125.7 106.9 81.0 89.7 93.3 118.4

## [12] 105.4 106.0 101.7 91.7 126.8 107.5 70.5 110.5 92.9

FIGURE 5.1: A directed acyclic graph illustrating a hierarchical model (partial pooling).

There are two other configurations possible that do not involve this hierarchical structure and which represent two alternative, extreme scenarios.

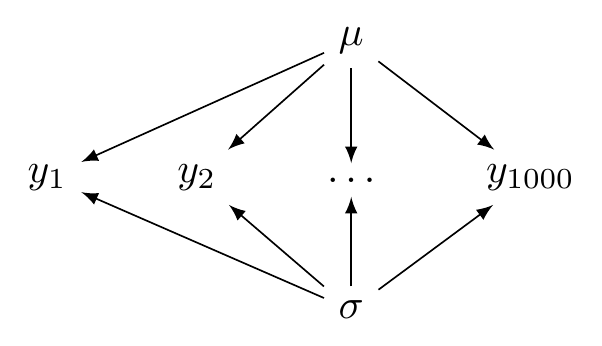

One of these two configurations is called the complete pooling model, Here, the data \(y_n\) are assumed to be generated from a single distribution:

\[\begin{equation} y_n \sim \mathit{Normal}(\mu,\sigma). \end{equation}\]

This model is an intercept only regression similar to what we saw in chapter 3.

Generate simulated observations in a vector y based on arbitrary true values in R in the following way.

sigma <- 4

mu <- 100

df_cp <- mutate(df, y = rnorm(N, mu, sigma))

df_cp## # A tibble: 1,000 × 3

## row subj y

## <int> <int> <dbl>

## 1 1 1 99.3

## 2 2 1 99.3

## 3 3 1 106.

## # ℹ 997 more rowsFit it in brms.

fit_cp <- brm(y ~ 1, df_cp,

prior =

c(prior(normal(50, 200), class = Intercept),

prior(normal(2, 5), class = sigma)))fit_cp## ...

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 100.15 0.12 99.90 100.39 1.00 3355 2176

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 4.00 0.09 3.83 4.17 1.00 3038 2630

##

## ...The configuration of the complete pooling model is illustrated in Figure 5.2.

FIGURE 5.2: A directed acyclic graph illustrating a complete pooling model.

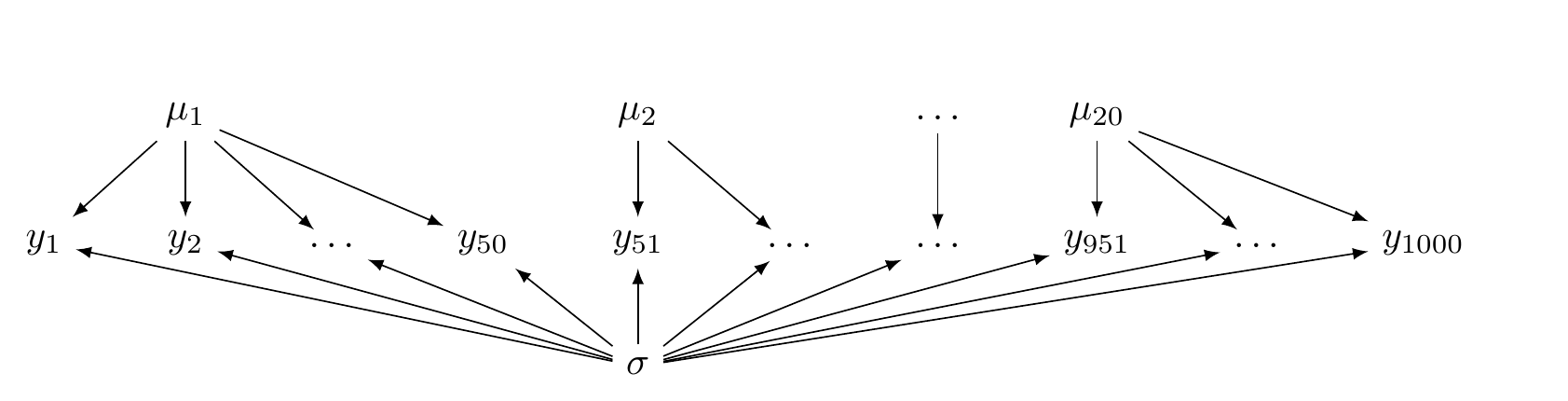

The other configuration is called the no pooling model; here, each \(y_n\) is assumed to be generated from an independent distribution:

\[\begin{equation} y_n \sim \mathit{Normal}(\mu_{subj[n]},\sigma) \end{equation}\]

Generate simulated observations from the no pooling model in R with arbitrary true values.

sigma <- 4

mu_i <- c(156, 178, 95, 183, 147, 191, 67, 153, 129, 119, 195,

150, 172, 97, 110, 115, 78, 126, 175, 80)

df_np <- mutate(df, y = rnorm(N, mu_i[subj], sigma))

df_np## # A tibble: 1,000 × 3

## row subj y

## <int> <int> <dbl>

## 1 1 1 157.

## 2 2 1 160.

## 3 3 1 164.

## # ℹ 997 more rowsFit the no pooling model in brms. By using the formula 0 + factor(subj), the common intercept can be removed and the model is forced to estimate one intercept for each subj separately. The column subj is converted to a factor so that brms does not interpret it as a number.

fit_np <- brm(y ~ 0 + factor(subj), df_np,

prior =

c(prior(normal(0, 200), class = b),

prior(normal(2, 5), class = sigma)))The summary shows now the 20 estimates of \(\mu_i\) as b_factorsubj and \(\sigma\). (We ignore lp__ and lprior.)

fit_np %>% posterior_summary()## Estimate Est.Error Q2.5 Q97.5

## b_factorsubj1 156.23 0.5559 155.16 157.34

## b_factorsubj2 176.73 0.5465 175.65 177.77

## b_factorsubj3 94.40 0.5520 93.28 95.50

## b_factorsubj4 183.08 0.5444 182.01 184.15

## b_factorsubj5 145.88 0.5676 144.76 146.98

## b_factorsubj6 191.10 0.5484 190.04 192.16

## b_factorsubj7 67.05 0.5490 65.95 68.10

## b_factorsubj8 152.43 0.5481 151.36 153.52

## b_factorsubj9 129.12 0.5569 128.04 130.20

## b_factorsubj10 119.74 0.5663 118.64 120.84

## b_factorsubj11 195.12 0.5655 193.99 196.22

## b_factorsubj12 150.11 0.5546 149.02 151.19

## b_factorsubj13 172.41 0.5442 171.31 173.45

## b_factorsubj14 95.42 0.5535 94.36 96.52

## b_factorsubj15 110.17 0.5600 109.10 111.24

## b_factorsubj16 115.25 0.5580 114.15 116.35

## b_factorsubj17 78.52 0.5574 77.43 79.61

## b_factorsubj18 125.61 0.5639 124.50 126.71

## b_factorsubj19 175.20 0.5566 174.12 176.26

## b_factorsubj20 81.14 0.5579 80.05 82.27

## sigma 3.91 0.0885 3.74 4.09

## lprior -131.51 0.0109 -131.53 -131.49

## lp__ -2912.04 3.3595 -2919.76 -2906.44Unlike the hierarchical model, now there is no common distribution that generates the \(\mu_i\) parameters. This is illustrated in Figure 5.3.

FIGURE 5.3: A directed acyclic graph illustrating a no pooling model.

The hierarchical model lies between these two extremes and for this reason is sometimes called a partial pooling model. One way that the hierarchical model is often described is that the estimates \(\mu_i\) “borrow strength” from the parameter \(\mu\) (which represents the grand mean in the above example).

An important practical consequence of partial pooling is the idea of “borrowing strength from the mean”: if we have very sparse data from a particular member of a group (e.g., missing data from a particular subject), the estimate \(\mu_i\) of that particular group member \(n\) is determined by the parameter \(\mu\). In other words, when the data are sparse for group member \(n\), the posterior estimate \(\mu_i\) is determined largely by the posterior on \(\mu\). In this sense, even the frequentist hierarchical modeling software in R, lmer() from the package lme4, is essentially Bayesian in formulation (except of course that there is no prior on \(\mu\)).

So far we have focused on the structure of \(\mu\), the location parameter of the likelihood. We could even have partial pooling, complete pooling or no pooling with respect to \(\sigma\), the scale parameter of the likelihood. More generally, any parameter of a likelihood can have any of these kinds of pooling.

In the coming sections, we will be looking at each of these models with more detail and using realistic examples.

5.2 A hierarchical model with a normal likelihood: The N400 effect

Event-related potentials (ERPs) allow scientists to observe electrophysiological responses in the brain measured by means of electroencephalography (EEG) that are time-locked to a specific event (i.e., the presentation of the stimuli). A very robust ERP effect in the study of language is the N400. Words with low predictability are accompanied by an N400 effect in comparison with high-predictable words, this is a relative negativity that peaks around 300-500 ms after word onset over central parietal scalp sites (first reported in Kutas and Hillyard 1980, for semantic anomalies, and in 1984 for low predictable word; for a review, see Kutas and Federmeier 2011). The N400 is illustrated in Figure 5.4.

FIGURE 5.4: Typical ERPs for the grand average across the N400 spatial window (central parietal electrodes: Cz, CP1, CP2, P3, Pz, P4, POz) for high and low predictability nouns (specifically from the constraining context of the experiment reported in Nicenboim, Vasishth, and Rösler 2020). The x-axis indicates time in seconds and the y-axis indicates voltage in microvolts (unlike many EEG/ERP plots, negative polarity is plotted downwards).

For example, in (1) below, the continuation ‘dog’ has lower predictability than the continuation ‘paint,’ and thus we would expect a more negative signal, that is, an N400 effect, in ‘dog’ in (b) in comparison with ‘paint’ in (a). Predictability is often measured with a cloze task (see section 1.4).

- Example from Kutas and Hillyard (1984)

- Don’t touch the wet paint.

- Don’t touch the wet dog.

The EEG data are typically recorded in tens of electrodes every couple of milliseconds, but for our purposes (i.e., for learning about Bayesian hierarchical models), we can safely ignore the complexity of the data. A common way to simplify the high-dimensional EEG data when we are dealing with the N400 is to focus on the average amplitude of the EEG signal at the typical spatio-temporal window of the N400 (for example, see Frank et al. 2015).

For this example, we are going to focus on the N400 effect for critical nouns from a subset of the data of Nieuwland et al. (2018). Nieuwland et al. (2018) presented a replication attempt of an original experiment of DeLong, Urbach, and Kutas (2005) with sentences like (2).

- Example from DeLong, Urbach, and Kutas (2005)

- The day was breezy so the boy went outside to fly a kite.

- The day was breezy so the boy went outside to fly an airplane.

We’ll ignore the goal of original experiment (DeLong, Urbach, and Kutas 2005), and its replication (Nieuwland et al. 2018). We are going to focus on the N400 at the final nouns in the experimental stimuli. In example (2), the final noun ‘kite’ has higher predictability than ‘airplane,’ and thus we would expect a more negative signal in ‘airplane’ in (b) in comparison with ‘kite’ in (a).

To speed up computation, we restrict the data set of Nieuwland et al. (2018) to the subjects from the Edinburgh lab. This subset of the data can be found in df_eeg in the bcogsci package. Center the cloze probability before using it as a predictor.

data("df_eeg")

(df_eeg <- df_eeg %>%

mutate(c_cloze = cloze - mean(cloze)))## # A tibble: 2,863 × 7

## subj cloze item n400 cloze_ans N c_cloze

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 0 1 7.08 0 44 -0.476

## 2 1 0.03 2 -0.68 1 44 -0.446

## 3 1 1 3 1.39 44 44 0.524

## # ℹ 2,860 more rows# Number of subjects

df_eeg %>%

distinct(subj) %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 37One convenient aspect of using averages of EEG data is that they are roughly normally distributed. This allows us to use the normal likelihood. Figure 5.5 shows the distribution of the data.

df_eeg %>% ggplot(aes(n400)) +

geom_histogram(binwidth = 4,

colour = "gray",

alpha = .5,

aes(y = after_stat(density))) +

stat_function(fun = dnorm,

args = list(mean = mean(df_eeg$n400),

sd = sd(df_eeg$n400))) +

xlab("Average voltage in microvolts for

the N400 spatiotemporal window")FIGURE 5.5: Histogram of the N400 averages for every trial, overlaid is a density plot of a normal distribution.

5.2.1 Complete pooling model (\(M_{cp}\))

We’ll start from the simplest model which is basically the linear regression we encountered in the preceding chapter.

5.2.1.1 Model assumptions

This model, call it \(M_{cp}\), makes the following assumptions.

- The EEG averages for the N400 spatiotemporal window are normally distributed.

- Observations are independent.

- There is a linear relationship between cloze and the EEG signal for the trial.

This model is incorrect for these data due to assumption (2) being violated.

With the last assumption, we are saying that the difference in the average signal when we compare nouns with cloze probability of \(0\) and \(0.1\) is the same as the difference in the signal when we compare nouns with cloze values of \(0.1\) and \(0.2\) (or \(0.9\) and \(1\)). This is just an assumption, and it may not necessarily be the case in the actual data. This means that we are going to get a posterior for \(\beta\) conditional on the assumption that the linear relationship holds. Even if the assumption approximately holds, we still don’t know how much we deviate from this assumption.

We can now decide on a likelihood and priors.

5.2.1.2 Likelihood and priors

A normal likelihood seems reasonable for these data:

\[\begin{equation} signal_n \sim \mathit{Normal}( \alpha + c\_cloze_n \cdot \beta,\sigma) \tag{5.1} \end{equation}\]

where \(n =1, \ldots, N\), and \(signal\) is the dependent variable (average signal in the N400 spatiotemporal window in microvolts). The variable \(N\) represents the total number of data points.

As always, we need to rely on our previous knowledge and domain expertise to decide on priors. We know that ERPs (signals time-locked to a stimulus) have mean amplitudes of a couple of microvolts: This is easy to see in any plot of the EEG literature. This means that we don’t expect the effect of our manipulation to exceed, say, \(10 \mu V\). As before, a priori we’ll assume that effects can be negative or positive. We can quantify our prior knowledge regarding plausible values of \(\beta\) as normally distributed, centered at zero with a standard deviation of \(10 \mu V\). (Other values such as \(5 \mu V\) would have been equally reasonable, since this smaller standard deviation would entail that 95% of the prior mass probability is between \(-10\) and \(10 \mu V\).)

If the signal for each ERP is baselined, that is, the mean signal of a time window before the time window of interest is subtracted from the time window of interest, then the mean signal would be relatively close to \(0\). Since we know that the ERPs were baselined in this study, we expect that the grand mean of our signal should be relatively close to zero. Our prior for \(\alpha\) is then also normally distributed, and centered at zero with a standard deviation of \(10 \mu V\).

The prior for the standard deviation \(\sigma\) of our signal distribution requires some thought. We know that EEG signals are quite noisy, and that the standard deviation must be higher than zero. Our prior for \(\sigma\) is a truncated normal distribution with location zero and scale 50. Recall that since we truncate the distribution, the parameters location and scale do not correspond to the mean and standard deviation of the new distribution; see online section A.2.

We can draw random samples from this truncated distribution and calculate their mean and standard deviation:

samples <- rtnorm(20000, mean = 0, sd = 50, a = 0)

c(mean = mean(samples), sd = sd(samples))## mean sd

## 39.9 30.2So we are essentially saying that we assume a priori that we will find the true standard deviation of the signal in the following interval with 95% probability:

quantile(samples, probs = c(0.025, 0.975))## 2.5% 97.5%

## 1.51 112.07# Analytically:

# c(qtnorm(0.025, 0, 50, a = 0), qtnorm(0.975, 0, 50, a = 0))To sum up, we are going to use the following priors:

\[\begin{equation} \begin{aligned} \alpha &\sim \mathit{Normal}(0,10)\\ \beta &\sim \mathit{Normal}(0,10)\\ \sigma &\sim \mathit{Normal}_{+}(0,50) \end{aligned} \end{equation}\]

A model such as \(M_{cp}\) is sometimes called a fixed-effects model: all the parameters are fixed in the sense that do not vary from subject to subject or from item to item. A similar frequentist model would correspond to fitting a simple linear model using the lm() function: lm(n400 ~ 1 + c_cloze, data = df_eeg).

We fit this model in brms as follows (the default family is gaussian() so we can omit it). As with the lm() function in R, by default an intercept is fitted and thus n400 ~ c_cloze is equivalent to n400 ~ 1 + c_cloze:

fit_N400_cp <-

brm(n400 ~ c_cloze,

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b, coef = c_cloze),

prior(normal(0, 50), class = sigma)),

data = df_eeg)For now, check the summary, and plot the posteriors of the model (Figure 5.6).

fit_N400_cp## ...

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 3.66 0.23 3.21 4.09 1.00 4067 2707

## c_cloze 2.25 0.56 1.13 3.35 1.00 4376 2968

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 11.82 0.16 11.53 12.13 1.00 5295 3331

##

## ...plot(fit_N400_cp)

FIGURE 5.6: Posterior distributions of the complete pooling model, fit_N400_cp.

5.2.2 No pooling model (\(M_{np}\))

One of the assumptions of the previous model is clearly wrong: observations are not independent, they are clustered by subject (and also by the specific item, but we’ll ignore this until section 5.2.4). It is reasonable to assume that EEG signals are more similar within subjects than between them. The following model assumes that each subject is completely independent from each other.20

5.2.2.1 Model assumptions

- EEG averages for the N400 spatio-temporal window are normally distributed.

- Every subject’s model is fit independently of the other subjects; the subjects have no parameters in common (an exception is the standard deviation, \(\sigma\); this is the same for all subjects in Equation (5.2)).

- There is a linear relationship between cloze and the EEG signal for the trial.

What likelihood and priors can we choose here?

5.2.2.2 Likelihood and priors

The likelihood is a normal distribution as before:

\[\begin{equation} signal_n \sim \mathit{Normal}( \alpha_{subj[n]} + c\_cloze_n \cdot \beta_{subj[n]},\sigma) \tag{5.2} \end{equation}\]

As before, \(n\) represents each observation, that is, the \(n\)th row in the data frame, which has \(N\) rows, and now the index \(i\) identifies the subject. The notation \(subj[n]\), which roughly follows Gelman and Hill (2007), identifies the subject index; for example, if \(subj[10]=3\), then the \(10\)th row of the data frame is from subject \(3\).

We define the priors as follows:

\[\begin{equation} \begin{aligned} \alpha_{i} &\sim \mathit{Normal}(0,10)\\ \beta_{i} &\sim \mathit{Normal}(0,10)\\ \sigma &\sim \mathit{Normal}_+(0,50) \end{aligned} \end{equation}\]

In brms, such a model can be fit by removing the common intercept with the formula n400 ~ 0 + factor(subj) + c_cloze:factor(subj).

This formula forces the model to estimate one intercept and one slope for each level of subj.21

The by-subject intercepts are indicated with factor(subj) and the by-subject slopes with c_cloze:factor(subj).

It’s very important to specify that subject should be treated as a factor and not as a numerical variable; we don’t assume that subject number 3 will show 3 times more positive (or negative) average signal than subject number 1! The model fits 37 independent intercepts and 37 independent slopes. By setting a prior to class = b and omitting coef, we are essentially setting identical priors to all the intercepts and slopes of the model. The parameters are independent from each other; it is only our previous knowledge (or prior beliefs) about their possible values (encoded in the priors) that is identical. We can set different priors to each intercept and slope, but that will mean setting 74 priors!

fit_N400_np <- brm(n400 ~ 0 + factor(subj) + c_cloze:factor(subj),

prior = c(prior(normal(0, 10), class = b),

prior(normal(0, 50), class = sigma)),

data = df_eeg)For this model, printing a summary means printing the 75 parameters (\(\alpha_{1,...,37}\), \(\beta_{1,...,37}\), and \(\sigma\)). We could do this as always by printing out the model results: just type fit_N400_np.

It may be easier to understand the output of the model by plotting \(\beta_{1,..,37}\) using bayesplot. (brms also includes a wrapper for this function called stanplot.) We can take a look at the internal names that brms gives to the parameters with variables(fit_N400_np); they are b_factorsubj, then the subject index and then :c_cloze. The code below changes the subject labels back to their original numerical indices and plots them in Figure 5.7. The subjects are ordered by the magnitude of their mean effects.

The model \(M_{np}\) does not estimate a unique population-level effect; instead, there is a different effect estimated for each subject. However, given the posterior means from each subject, it is still possible to calculate the average of these estimates \(\hat\beta_{1,...,I}\), where \(I\) is the total number of subjects:

# parameter name of beta by subject:

ind_effects_np <- paste0("b_factorsubj",

unique(df_eeg$subj), ":c_cloze")

beta_across_subj <- as.data.frame(fit_N400_np) %>%

#removes the meta data from the object

select(all_of(ind_effects_np)) %>%

rowMeans()

# Calculate the average of these estimates

(grand_av_beta <- tibble(mean = mean(beta_across_subj),

lq = quantile(beta_across_subj, c(0.025)),

hq = quantile(beta_across_subj, c(0.975))))## # A tibble: 1 × 3

## mean lq hq

## <dbl> <dbl> <dbl>

## 1 2.17 1.14 3.16In Figure 5.7, the 95% credible interval of this overall mean effect is plotted as two vertical lines together with the effect of cloze probability for each subject (ordered by effect size). Here, rather than using a plotting function from brms, we can extract the summary of by-subject effects, reorder them by magnitude, and then plot the summary with a custom plot using ggplot2.

# make a table of beta's by subject

beta_by_subj <- posterior_summary(fit_N400_np,

variable = ind_effects_np) %>%

as.data.frame() %>%

mutate(subject = 1:n()) %>%

## reorder plot by magnitude of mean:

arrange(Estimate) %>%

mutate(subject = factor(subject, levels = subject))The code below generates Figure 5.7.

ggplot(beta_by_subj,

aes(x = Estimate, xmin = Q2.5, xmax = Q97.5, y = subject)) +

geom_point() +

geom_errorbarh() +

geom_vline(xintercept = grand_av_beta$mean) +

geom_vline(xintercept = grand_av_beta$lq, linetype = "dashed") +

geom_vline(xintercept = grand_av_beta$hq, linetype = "dashed") +

xlab("By-subject effect of cloze probability in microvolts")

FIGURE 5.7: 95% credible intervals of the effect of cloze probability for each subject according to the no pooling model, fit_N400_np. The solid vertical line represents the mean over all the subjects; and the broken vertical lines mark the 95% credible interval for this mean.

5.2.4 Correlated varying intercept varying slopes model (\(M_{h}\))

The model \(M_{v}\) allowed for differences in intercepts (mean voltage) and slopes (effects of cloze) across subjects, but it has the implicit assumption that these varying intercepts and varying slopes are independent. It is in principle possible that subjects showing more negative voltage may also show stronger effects (or weaker effects). Next, we fit a model that allows a correlation between the intercepts and slopes. We model the correlation between varying intercepts and slopes by defining a variance-covariance matrix \(\boldsymbol{\Sigma}\) between the by-subject varying intercepts and slopes, and by assuming that both adjustments (intercept and slope) come from a multivariate (in this case, a bivariate) normal distribution.

- In \(M_h\), we model the EEG data with the following assumptions:

- EEG averages for the N400 spatio-temporal window are normally distributed.

- Some aspects of the mean signal voltage and of the effect of predictability depend on the subject, and these two might be correlated, i.e., we assume group-level intercepts and slopes, and allow a correlation between them by-subject.

- There is a linear relationship between cloze and the EEG signal for the trial.

The likelihood remains identical to the model \(M_v\), which assumes no correlation between group-level intercepts and slopes (section 5.2.3):

\[\begin{equation} signal_n \sim \mathit{Normal}(\alpha + u_{subj[n],1} + c\_cloze_n \cdot (\beta + u_{subj[n],2}),\sigma) \end{equation}\]

The correlation is indicated in the priors on the adjustments for intercept \(u_{1}\) and slopes \(u_{2}\).

- Priors: \[\begin{equation} \begin{aligned} \alpha & \sim \mathit{Normal}(0,10) \\ \beta & \sim \mathit{Normal}(0,10) \\ \sigma &\sim \mathit{Normal}_+(0,50)\\ {\begin{pmatrix} u_{i,1} \\ u_{i,2} \end{pmatrix}} &\sim {\mathcal {N}} \left( {\begin{pmatrix} 0\\ 0 \end{pmatrix}} ,\boldsymbol{\Sigma_u} \right) \end{aligned} \end{equation}\]

In this model, a bivariate normal distribution generates the varying intercepts and varying slopes \(\mathbf{u}\); this is an \(n\times 2\) matrix. The variance-covariance matrix \(\boldsymbol{\Sigma_u}\) defines the standard deviations of the varying intercepts and varying slopes, and the correlation between them. Recall from section 1.6.2 that the diagonals of the variance-covariance matrix contain the variances of the correlated random variables, and the off-diagonals contain the covariances. In this example, the covariance \(Cov(u_1,u_2)\) between two variables \(u_1\) and \(u_2\) is defined as the product of their correlation \(\rho\) and their standard deviations \(\tau_{u_1}\) and \(\tau_{u_2}\). In other words, \(Cov(u_1,u_2) = \rho_u \tau_{u_1} \tau_{u_2}\).

\[\begin{equation} \boldsymbol{\Sigma_u} = {\begin{pmatrix} \tau_{u_1}^2 & \rho_u \tau_{u_1} \tau_{u_2} \\ \rho_u \tau_{u_1} \tau_{u_2} & \tau_{u_2}^2 \end{pmatrix}} \end{equation}\]

In order to specify a prior for \(\Sigma_u\), we need priors for the standard deviations, \(\tau_{u_1}\), and \(\tau_{u_2}\), and also for their correlation, \(\rho_u\). We can use the same priors for \(\tau\) as before. For the correlation matrix that contains \(\rho_u\), we use the LKJ prior. The basic idea of the LKJ prior on the correlation matrix is that as its parameter, \(\eta\) (eta), increases, it will favor correlations closer to zero.25 At \(\eta = 1\), the LKJ correlation distribution is uninformative (if there is a single correlation parameter, this is similar to \(\mathit{Beta}(1,1)\), scaled to the interval \([-1,1]\)), at \(\eta < 1\), it favors extreme correlations (similar to \(\mathit{Beta}(a<1,b<1)\)). We set \(\eta = 2\) so that we don’t favor extreme correlations, and we still represent our lack of knowledge through the wide spread of the prior between \(-1\) and \(1\). Thus, \(\eta = 2\) gives us a regularizing, relatively uninformative or mildly informative prior.

Figure 5.11 shows a visualization of different parameterizations of the LKJ prior.

FIGURE 5.11: Visualization of the LKJ correlation distribution prior with four different values of the \(\eta\) parameter.

\[\begin{equation} \begin{aligned} \tau_{u_1} &\sim \mathit{Normal}_+(0,20)\\ \tau_{u_2} &\sim \mathit{Normal}_+(0,20)\\ \begin{bmatrix} 1 & \rho_u \\ \rho_u & 1 \end{bmatrix} &\sim \mathit{LKJcorr}(2) \end{aligned} \end{equation}\]

In our brms model, we allow a correlation between the by-subject intercepts and slopes by using a single pipe | instead of the double pipe || that we used previously. This convention follows the syntax used in the frequentist lmer() function. As before, the varying intercepts are implicitly fit.

Because we have a new parameter, the correlation \(\rho_{u}\), we need to add a new prior for this correlation. In brms, this is achieved by adding a prior for the parameter type cor. This is not obvious from the brms syntax, but the LKJ prior is being defined on the correlation matrix, not the correlation \(\rho_{u}\).

prior_h <- c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b, coef = c_cloze),

prior(normal(0, 50), class = sigma),

prior(normal(0, 20),

class = sd, coef = Intercept,

group = subj),

prior(normal(0, 20),

class = sd, coef = c_cloze,

group = subj),

prior(lkj(2), class = cor, group = subj))

fit_N400_h <- brm(n400 ~ c_cloze + (c_cloze | subj),

prior = prior_h,

data = df_eeg)The estimates do not change much in comparison with the varying intercept and varying slope model, probably because the estimation of the correlation is quite poor (i.e., there is a lot of uncertainty in the posterior). While the inclusion of the correlation parameter is justified, this results, in our experience, in a slower convergence of the models (see also the discussion at the end of the section 5.2.6).

As before, the estimates are shown graphically, in Figure 5.12. As always, one can access the complete summary by typing fit_N400_h.

plot(fit_N400_h, nvariables = 6)

FIGURE 5.12: The posteriors of the parameters in the model fit_N400_h.

We are now half-way to what is sometimes called the “maximal” hierarchical model (Barr et al. 2013). This usually refers to a model with all the by-participant and by-items group-level variance components allowed by the experimental design and a full variance covariance matrix for all the group-level parameters. Not all variance components are allowed by the experimental design; in particular, between-group manipulations cannot have variance components. For example, even if we assume that the working memory capacity of the subjects might affect the N400, we cannot measure how working memory affects the subjects differently.

When we refer to a full variance-covariance matrix, we mean a variance-covariance matrix where all the elements (variances and covariances) are non-zero. In our previous model, for example, the variance-covariance matrix \(\boldsymbol{\Sigma_u}\) was full because no element was zero. If we assume no correlation between group-level intercept and slope, there would need to be zeros in the diagonal of the matrix, and this would render the model identical to \(M_{v}\) defined in section 5.2.3. If we also assume that the bottom right element (\(\tau^2\)) is zero, the model would turn into a varying intercept model (in brms formula n400 ~ c_cloze + (1 | subj)); and if we assume that the variance-covariance matrix has zeros in every cell, the model would turn into a complete pooling model, \(M_{cp}\), as defined in section 5.2.1.

As we will see in section 5.2.6 and in the online chapter F, “maximal” is probably a misnomer for Bayesian models, since this mostly refers to limitations of the popular frequentist package for fitting models, lme4.

The next section spells out a model with full variance-covariance matrix for both subjects and items-level effects.

5.2.5 By-subjects and by-items correlated varying intercept varying slopes model (\(M_{sih}\))

Our new model, \(M_{sih}\), will allow for differences in intercepts (mean voltage) and slopes (effects of predictability) across subjects and across items. In typical Latin square designs, subjects and items are said to be crossed random effects—each subject sees exactly one instance of each item. Here we assume a possible correlation between varying intercepts and slopes by subjects, as well as a correlation between varying intercepts and slopes by items.

- In \(M_{sih}\), we model the EEG data with the following assumptions:

EEG averages for the N400 spatio-temporal window are normally distributed.

Some aspects of the mean signal voltage and of the effect of predictability depend on the subject, i.e., we assume group-level intercepts, and slopes, and a correlation between them by-subject.

Some aspects of the mean signal voltage and of the effect of predictability depend on the item, i.e., we assume group-level intercepts, and slopes, and a correlation between them by-item.

There is a linear relationship between cloze and the EEG signal for the trial.

- Likelihood:

\[\begin{multline} signal_n \sim \mathit{Normal}(\alpha + u_{subj[n],1} + w_{item[n],1} + \\ c\_cloze_n \cdot (\beta + u_{subj[n],2}+ w_{item[n],2}), \sigma) \end{multline}\]

- Priors: \[\begin{equation} \begin{aligned} \alpha & \sim \mathit{Normal}(0,10) \\ \beta & \sim \mathit{Normal}(0,10) \\ \sigma &\sim \mathit{Normal}_+(0,50)\\ {\begin{pmatrix} u_{i,1} \\ u_{i,2} \end{pmatrix}} &\sim {\mathcal {N}} \left( {\begin{pmatrix} 0\\ 0 \end{pmatrix}} ,\boldsymbol{\Sigma_u} \right) \\ {\begin{pmatrix} w_{j,1} \\ w_{j,2} \end{pmatrix}} &\sim {\mathcal {N}} \left( {\begin{pmatrix} 0\\ 0 \end{pmatrix}} ,\boldsymbol{\Sigma_w} \right) \end{aligned} \end{equation}\]

The index \(j\) represents the item id; this is analogous to the index \(i\) for subjects. Similarly, \(item[n]\) indicates the item that corresponds to the observation in the \(n\)-th row of the data frame.

We have hyperparameters and hyperpriors as before:

\[\begin{equation} \begin{aligned} \boldsymbol{\Sigma_u} & = {\begin{pmatrix} \tau_{u_1}^2 & \rho_u \tau_{u_1} \tau_{u_2} \\ \rho_u \tau_{u_1} \tau_{u_2} & \tau_{u_2}^2 \end{pmatrix}}\\ \boldsymbol{\Sigma_w} & = {\begin{pmatrix} \tau_{w_1}^2 & \rho_w \tau_{w_1} \tau_{w_2} \\ \rho_w \tau_{w_1} \tau_{w_2} & \tau_{w_2}^2 \end{pmatrix}} \end{aligned} \end{equation}\]

\[\begin{equation} \begin{aligned} \tau_{u_1} &\sim \mathit{Normal}_+(0,20)\\ \tau_{u_2} &\sim \mathit{Normal}_+(0,20)\\ \begin{bmatrix} 1 & \rho_u \\ \rho_u & 1 \end{bmatrix} \sim \mathit{LKJcorr}(2)\\ \tau_{w_1} &\sim \mathit{Normal}_+(0,20)\\ \tau_{w_2} &\sim \mathit{Normal}_+(0,20)\\ \begin{bmatrix} 1 & \rho_w \\ \rho_w & 1 \end{bmatrix} \sim \mathit{LKJcorr}(2)\\ \end{aligned} \end{equation}\]

We set identical priors for by-items group-level effects as for by-subject group-level effects, but this is only because we don’t have any differentiated prior information about subject-level vs. item-level variation. However, bear in mind that the estimation for items is completely independent from the estimation for subjects. Although we wrote many more equations than before, the brms model is quite straightforward to extend.

We assign the priors to the by-subject and by-item parameters first:

prior_sih_full <-

c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b, coef = c_cloze),

prior(normal(0, 50), class = sigma),

prior(normal(0, 20),

class = sd, coef = Intercept,

group = subj),

prior(normal(0, 20),

class = sd, coef = c_cloze,

group = subj),

prior(lkj(2), class = cor, group = subj),

prior(normal(0, 20),

class = sd, coef = Intercept,

group = item),

prior(normal(0, 20),

class = sd, coef = c_cloze,

group = item),

prior(lkj(2), class = cor, group = item))We can also simplify the priors, when we assign the same priors to the by-subject and by-item parameters:

prior_sih <-

c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 50), class = sigma),

prior(normal(0, 20), class = sd),

prior(lkj(2), class = cor))Fit the model:

fit_N400_sih <- brm(n400 ~ c_cloze + (c_cloze | subj) +

(c_cloze | item),

prior = prior_sih,

data = df_eeg)We have new group-level effects in the summary if we run fit_N400_sih, but again the estimate of the effect of cloze remains virtually unchanged (Figure 5.13).

fit_N400_sih## ...

## Group-Level Effects:

## ~item (Number of levels: 80)

## Estimate Est.Error l-95% CI u-95% CI Rhat

## sd(Intercept) 1.51 0.35 0.81 2.18 1.00

## sd(c_cloze) 2.31 1.03 0.26 4.30 1.01

## cor(Intercept,c_cloze) -0.42 0.32 -0.90 0.32 1.00

## Bulk_ESS Tail_ESS

## sd(Intercept) 1459 1717

## sd(c_cloze) 1040 1236

## cor(Intercept,c_cloze) 2299 2353

##

## ~subj (Number of levels: 37)

## Estimate Est.Error l-95% CI u-95% CI Rhat

## sd(Intercept) 2.21 0.37 1.55 3.02 1.00

## sd(c_cloze) 1.47 0.89 0.08 3.28 1.00

## cor(Intercept,c_cloze) 0.13 0.35 -0.60 0.77 1.00

## Bulk_ESS Tail_ESS

## sd(Intercept) 1433 2064

## sd(c_cloze) 1131 1446

## cor(Intercept,c_cloze) 4318 2764

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 3.66 0.46 2.72 4.54 1.00 2108 2604

## c_cloze 2.31 0.70 0.92 3.63 1.00 3998 3250

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 11.49 0.16 11.18 11.79 1.00 5701 2832

##

## ...plot(fit_N400_sih, nvariables = 9)

FIGURE 5.13: The posterior distributions of the parameters in the model fit_N400_sih.

5.2.6 Beyond the maximal model–Distributional regression models

We can use posterior predictive checks to verify that our last model can capture the entire signal distribution. This is shown in Figure 5.14.

pp_check(fit_N400_sih, ndraws = 50, type = "dens_overlay")

FIGURE 5.14: Overlay of densities from the posterior predictive distributions of the model fit_N400_sih.

However, we know that in ERP studies, large levels of impedance between the recording electrodes and the skin tissue increase the noise in the recordings (Picton et al. 2000). Given that skin tissue is different between subjects, it could be the case that the level of noise varies by subject. It might be a good idea to verify that our model is good enough for capturing the by-subject variability. The code below produces Figure 5.15.

pp_check(fit_N400_sih,

type = "dens_overlay_grouped",

ndraws = 100,

group = "subj") +

xlab("Signal in the N400 spatiotemporal window") +

theme(legend.position = "inside",

legend.position.inside = c(.5,.08))FIGURE 5.15: The plot shows 100 predicted distributions with the label \(y_{rep}\) and the distribution of the average signal data with the label \(y\) density plots for the 37 subjects that participated in the experiment.

Figure 5.15 hints that we might be misfitting some subjects: Some of the by-subject observed distributions of the EEG signal averages look much tighter than their corresponding posterior predictive distributions (e.g., subjects 3, 5, 9, 10, 14), whereas some other by-subject observed distributions look wider (e.g., subjects 25, 26, 27). Another approach to examine whether we misfit the by-subject noise level is to plot posterior distributions of the standard deviations and compare them with the observed standard deviation. This is achieved in the following code, which groups the data by subject, and shows the distribution of standard deviations. The result is shown in Figure 5.16. It is clear now that, for some subjects, the observed standard deviation lies outside the bulk of the distribution of predictive standard deviations.

pp_check(fit_N400_sih,

type = "stat_grouped",

ndraws = 1000,

group = "subj",

stat = "sd",

facet_args = list(scales = "fixed")) +

scale_x_continuous(breaks = c(8, 12, 16), limits = c(7,19)) +

theme(legend.position = "inside",

legend.position.inside = c(.5,.08))FIGURE 5.16: Distribution of posterior predicted standard deviations in gray and observed standard deviation in black lines by subject.

Why is our “maximal” hierarchical model misfitting the by-subject distribution of data? This is because, the maximal models are, in general and implicitly, models with the maximal group-level effect structure for the location parameter (e.g., the mean, \(\mu\), in a normal model). Other parameters (e.g., scale or shape parameters) are estimated as auxiliary parameters, and are assumed to be constant across observations and clusters. This assumption is so common that researchers may not be aware that it is just an assumption. In the Bayesian framework, it is easy to change such default assumptions if necessary. Changing the assumption that all subjects have the same residual standard deviation leads to the distributional regression model. Such models can be fit in brms; also see the brms vignette, https://cran.r-project.org/web/packages/brms/vignettes/brms_distreg.html.

We are going to change our previous likelihood, so that the scale \(\sigma\) also has a group-level effect structure. We exponentiate \(\sigma\) to make sure that the negative adjustments do not cause \(\sigma\) to become negative.

\[\begin{equation} \begin{aligned} signal_n &\sim \mathit{Normal}(\alpha + u_{subj[n],1} + w_{item[n],1} + \\ & \hspace{2cm} c\_cloze_n \cdot (\beta + u_{subj[n],2}+ w_{item[n],2}), \sigma_n)\\ \sigma_n &= \exp(\sigma_\alpha + \sigma_{u_{subj[n]}}) \end{aligned} \end{equation}\]

We just need to add priors to our new parameters (that replace the old prior for \(\sigma\)). We set the prior to the intercept of the standard deviation, \(\sigma_\alpha\), to be similar to our previous \(\sigma\). For the variance component of \(\sigma\), \(\tau_{\sigma_u}\), we set rather uninformative hyperpriors. Recall that everything is exponentiated when it goes inside the likelihood; that is why we use \(\log(50)\) rather than \(50\) for the standard deviation of the prior for \(\sigma\).

\[\begin{equation} \begin{aligned} \sigma_\alpha &\sim \mathit{Normal}(0,\log(50))\\ \sigma_u &\sim \mathit{Normal}(0, \tau_{\sigma_u}) \\ \tau_{\sigma_u} &\sim \mathit{Normal}_+(0, 5) \end{aligned} \end{equation}\]

This model can be fit in brms using the internal function brmsformula() (or its shorter alias bf()). This is a powerful function that extends the formulas that we used so far allowing for setting a hierarchical regression to any parameter of a model. This will allow us to set a by-subject hierarchical structure to the parameter \(\sigma\). We also need to set new priors; these priors are identified by dpar = sigma.

prior_s <-

c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 20), class = sd),

prior(lkj(2), class = cor),

prior(normal(0, log(50)), class = Intercept, dpar = sigma),

prior(normal(0, 5),

class = sd,

group = subj,

dpar = sigma))

fit_N400_s <- brm(

brmsformula(n400 ~ c_cloze + (c_cloze | subj) + (c_cloze | item),

sigma ~ 1 + (1 | subj)),

prior = prior_s, data = df_eeg)Inspect the output below; notice that our estimate for the effect of cloze

remains very similar to that of the model fit_N400_sih.

Compare the two models’ estimates:

posterior_summary(fit_N400_sih, variable = "b_c_cloze")## Estimate Est.Error Q2.5 Q97.5

## b_c_cloze 2.31 0.695 0.924 3.63posterior_summary(fit_N400_s, variable = "b_c_cloze")## Estimate Est.Error Q2.5 Q97.5

## b_c_cloze 2.29 0.658 1.04 3.61Nonetheless, Figure 5.17 shows that the fit of the model with respect to the by-subject variability is much better than before. Furthermore, Figure 5.18 shows that the observed standard deviations for each subject are well inside the posterior predictive distributions. The code below produces Figure 5.17.

pp_check(fit_N400_s,

type = "dens_overlay_grouped",

ndraws = 100,

group = "subj") +

xlab("Signal in the N400 spatiotemporal window") +

theme(legend.position = "inside",

legend.position.inside = c(.5,.08))FIGURE 5.17: The gray density plots show 100 predicted distributions from a model that includes a hierarchical structure for \(\sigma\). The black density plots show the distribution of the average signal data for the 37 subjects in the experiment.

FIGURE 5.18: The gray lines show the distributions of posterior predicted standard deviations from a model that includes a hierarchical structure for \(\sigma\), and observed mean standard deviations by subject (black vertical lines).

The model fit_N400_s raises the question: how much structure should we add to our statistical model? Should we also assume that \(\sigma\) can vary by items, and also by our experimental manipulation? Should we also have a maximal model for \(\sigma\)? Unfortunately, there are no clear answers that apply to every situation. The amount of complexity that we can introduce in a statistical model depends on (i) the answers we are looking for (we should include parameters that represent what we want to estimate), (ii) the size of the data at hand (more complex models require more data; this could mean more observations within subjects and/or the number of subjects), (iii) our computing power (as the complexity increases, models take increasingly long to converge and require more computer power to finish the computations in a feasible time frame), and (iv) our domain knowledge.

Whether certain variance components should be included in a model also depends on whether there is evidence that they impact statistical inference (this could be investigated using model comparison techniques like Bayes factors). For example, it is well known that estimating group-level effects for the location parameter can have a strong influence on the test statistics for the corresponding population-level effect (Barr et al. 2013; Schad et al. 2021). Ultimately, all models are approximations (that’s in the best case; often, they are plainly wrong) and we need to think carefully about which aspects of our data we have to account and which aspects we can abstract away from.

In the context of cognitive modeling, McClelland (2009) argues that models should not focus on a every single detail of the process they intend to explain. In order to understand a model, it needs to be simple enough. However, McClelland (2009) warns us that one must bear in mind that oversimplification does have an impact on what we can conclude from our analysis: A simplification can limit the phenomena that a model addresses, or can even lead to incorrect predictions. There is a continuum between purely statistical models (e.g., a linear regression) and computational cognitive models. For example, we can define “hybrid” models such as the linear ballistic accumulator (Brown and Heathcote 2008; and see Nicenboim 2018 for an implementation in Stan), where a great deal of cognitive detail is sacrificed for tractability. The conclusions of McClelland (2009) apply to any type of model in cognitive science: “Simplification is essential, but it comes at a cost, and real understanding depends in part on understanding the effects of the simplification.”

5.3 A hierarchical log-normal model: The Stroop effect

Next, we illustrate some of the issues that arise with a log-normal likelihood in a hierarchical model. The data are from a Stroop task (Stroop 1935; for a review, see MacLeod 1991). We will analyze a subset of the data of 3337 subjects that participated in one variant of the Stroop task; this was part of a battery of tasks run in Ebersole et al. (2016).

For this variant of the Stroop task, subjects were presented with one word (“red,” “blue,” or “green”) at the center of the screen, written in either red, blue, or green color. In one third of the trials, the word matched the text color (“congruent” condition); in the remaining trials, it did not match (“incongruent” condition). Subjects were instructed to focus only on the color of the text and press 1 if the color was red, 2 if it was blue, and 3 if it was green. The incongruent condition is more difficult because subjects need to identify the color when it mismatches the word. For example, they might need to respond that the color is blue when the word “green” is written in blue; this is more challenging than the congruent condition, where the word “green” is written in green. This increased difficulty in the incongruent condition is known as the Stroop effect, which is extremely robust across task variations.

This task yields two measures: the accuracy of the decision made, and the time it took to respond. For the Stroop task, accuracy is usually almost at ceiling; to simplify the model, we will ignore accuracy. For cognitive models that incorporates accuracy and response times into a model to analyze these Stroop data, see Nicenboim (2018). Additionally, chapters 17 and 18 address response time and accuracy data.

5.4 Why fitting a Bayesian hierarchical model is worth the effort

Carrying out Bayesian data analysis clearly requires much more effort than fitting a frequentist model: we have to define priors, verify that our model works, and decide how to interpret the results. By comparison, fitting a linear mixed model using lme4 consists of only a single line of code. But there is a hidden cost to the relatively high speed furnished by functions such as lmer(). First, the model fit using lmer() or the like makes many assumptions, but they are hidden from the user. This is not a problem for the knowledgeable modeler, but very dangerous for the naive user. A second conceptual problem is that the way frequentist models are typically used is to answer a binary question: is the effect “significant” or not? Although frequentist models can quickly answer the question whether there is evidence against the null hypothesis, this often is the wrong question given the actual research problem. For discussion, see Vasishth and Gelman (2021).

Nevertheless, it is natural to ask why one should bother to go through all the trouble of fitting a Bayesian model. An important reason is the flexibility in model specification. The approach we have presented here can be used to extend essentially any parameter of any model. This includes popular uses, such as logistic and Poisson regressions, and also useful models that are relatively rarely used in cognitive science, such as multi-logistic regression (e.g., accuracy in some task with more than two answers), ordered logistic (e.g., ratings, Bürkner and Vuorre 2019), models with a shifted log-normal distribution (see the online exercise G.10.1 and chapter 18, which deals with a log-normal race mode, and see Nicenboim, Logačev, et al. 2016; Rouder 2005), and distributional regression models (as shown in section 5.2.6). By contrast, a frequentist model, although easy to fit quickly, forces the user to use an inflexible canned model, which may not necessarily make sense for their data.

This flexibility allows us also to go beyond the statistical models discussed before, and to develop complex hierarchical computational process models that are tailored to specific phenomena. An example is computational cognitive models, which can be extended hierarchically in a straightforward way; see Lee (2011b) and Lee and Wagenmakers (2014). This flexibility is possible because, as we have seen with distributional regression models in section 5.2.6, any parameter can have a group-level effect structure. Some examples of hierarchical computational cognitive models in psycholinguistics are Logačev and Vasishth (2016), Nicenboim and Vasishth (2018), Vasishth, Chopin, et al. (2017), Vasishth, Jäger, and Nicenboim (2017), Lissón et al. (2021), Logačev and Dokudan (2021), Paape et al. (2021), and Paape and Vasishth (2022). The hierarchical Bayesian modeling approach can even be extended to process models that cannot be expressed as a likelihood function, although in such cases one may have to write one’s own sampler; for an example from psycholinguistics, see Yadav et al. (2022) and Yadav et al. (2023). In chapters 15-18, we discuss and implement in Stan some relatively simple computational cognitive models.

5.5 Summary

This chapter presents two very commonly used classes of hierarchical model: those with normal and log-normal likelihoods. We saw several common variants of such models: varying intercepts, varying intercepts and varying slopes with or without a correlation parameter, and crossed random effects for subjects and items. We also experienced the flexibility of the Stan modeling framework through the example of a model that assumes a different residual standard deviation for each subject.

5.6 Further reading

Chapter 5 of Gelman et al. (2014) and O’Hagan and Forster (2004) provide rather technical but comprehensive treatments of exchangeability in Bayesian hierarchical models. Bernardo and Smith (2009) is a brief but useful article explaining exchangeability, and Lunn et al. (2012) also has a helpful discussion that we have drawn on in this chapter. Gelman and Hill (2007) is a comprehensive treatment of hierarchical modeling, although it uses WinBUGS. Yarkoni (2020) discusses the importance of modeling variability in variables that researchers clearly intend to generalize over (e.g., stimuli, tasks, or research sites), and how under-specification of group-level effects imposes strong constraints on the generalizability of results. Sorensen, Hohenstein, and Vasishth (2016) provides an introduction, using Stan, to the Laird-Ware style matrix formulation (Laird and Ware 1982) of hierarchical models; this formulation has the advantage of flexibility and efficiency when specifying models in Stan syntax.

References

Barr, Dale J., Roger P. Levy, Christoph Scheepers, and Harry J. Tily. 2013. “Random Effects Structure for Confirmatory Hypothesis Testing: Keep It Maximal.” Journal of Memory and Language 68 (3): 255–78.

Bates, Douglas M., Martin Mächler, Ben Bolker, and Steve Walker. 2015. “Fitting Linear Mixed-Effects Models Using lme4.” Journal of Statistical Software 67 (1): 1–48. https://doi.org/10.18637/jss.v067.i01.

Bernardo, José M., and Adrian F. M. Smith. 2009. Bayesian Theory. Vol. 405. John Wiley & Sons.

Brown, Scott D., and Andrew Heathcote. 2005. “A Ballistic Model of Choice Response Time.” Psychological Review 112 (1): 117.

2008. “The Simplest Complete Model of Choice Response Time: Linear Ballistic Accumulation.” Cognitive Psychology 57 (3): 153–78. https://doi.org/10.1016/j.cogpsych.2007.12.002.Bürkner, Paul-Christian, and Matti Vuorre. 2019. “Ordinal Regression Models in Psychology: A Tutorial.” Advances in Methods and Practices in Psychological Science 2 (1): 77–101. https://doi.org/https://doi.org/10.1177/2515245918823199.

de Finetti, Bruno. 1931. “Funcione Caratteristica Di Un Fenomeno Aleatorio.” Atti Dela Reale Accademia Nazionale Dei Lincei, Serie 6. Memorie, Classe Di Scienze Fisiche, Mathematice E Naturale 4: 251–99.

DeLong, Katherine A., Thomas P. Urbach, and Marta Kutas. 2005. “Probabilistic Word Pre-Activation During Language Comprehension Inferred from Electrical Brain Activity.” Nature Neuroscience 8 (8): 1117–21. https://doi.org/10.1038/nn1504.

Ebersole, Charles R., Olivia E. Atherton, Aimee L. Belanger, Hayley M. Skulborstad, Jill M. Allen, Jonathan B. Banks, Erica Baranski, et al. 2016. “Many Labs 3: Evaluating Participant Pool Quality Across the Academic Semester via Replication.” Journal of Experimental Social Psychology 67: 68–82. https://doi.org/https://doi.org/10.1016/j.jesp.2015.10.012.

Frank, Stefan L., Leun J. Otten, Giulia Galli, and Gabriella Vigliocco. 2015. “The ERP Response to the Amount of Information Conveyed by Words in Sentences.” Brain and Language 140: 1–11. https://doi.org/10.1016/j.bandl.2014.10.006.

Gelman, Andrew, and John B. Carlin. 2014. “Beyond Power Calculations: Assessing Type S (Sign) and Type M (Magnitude) Errors.” Perspectives on Psychological Science 9 (6): 641–51. https://doi.org/https://doi.org/10.1177/1745691614551642.

Gelman, Andrew, John B. Carlin, Hal S. Stern, David B. Dunson, Aki Vehtari, and Donald B. Rubin. 2014. Bayesian Data Analysis. Third Edition. Boca Raton, FL: Chapman; Hall/CRC Press.

Gelman, Andrew, and Jennifer Hill. 2007. Data Analysis Using Regression and Multilevel/Hierarchical Models. Cambridge University Press.

Kutas, Marta, and Kara D. Federmeier. 2011. “Thirty Years and Counting: Finding Meaning in the N400 Componentof the Event-Related Brain Potential (ERP).” Annual Review of Psychology 62 (1): 621–47. https://doi.org/10.1146/annurev.psych.093008.131123.

Kutas, Marta, and Steven A. Hillyard. 1980. “Reading Senseless Sentences: Brain Potentials Reflect Semantic Incongruity.” Science 207 (4427): 203–5. https://doi.org/10.1126/science.7350657.

Kutas, Marta, and Steven A. 1984. “Brain Potentials During Reading Reflect Word Expectancy and Semantic Association.” Nature 307 (5947): 161–63. https://doi.org/10.1038/307161a0.

Laird, Nan M., and James H. Ware. 1982. “Random-Effects Models for Longitudinal Data.” Biometrics, 963–74. https://doi.org/https://doi.org/10.2307/2529876.

Lee, Michael D, ed. 2011b. “How Cognitive Modeling Can Benefit from Hierarchical Bayesian Models.” Journal of Mathematical Psychology 55 (1): 1–7. https://doi.org/10.1016/j.jmp.2010.08.013.

Lee, Michael D, and Eric-Jan Wagenmakers. 2014. Bayesian Cognitive Modeling: A Practical Course. Cambridge University Press.

Lissón, Paula, Dorothea Pregla, Bruno Nicenboim, Dario Paape, Mick van het Nederend, Frank Burchert, Nicole Stadie, David Caplan, and Shravan Vasishth. 2021. “A Computational Evaluation of Two Models of Retrieval Processes in Sentence Processing in Aphasia.” Cognitive Science 45 (4): e12956. https://onlinelibrary.wiley.com/doi/full/10.1111/cogs.12956.

Logačev, Pavel, and Noyan Dokudan. 2021. “A Multinomial Processing Tree Model of RC Attachment.” In Proceedings of the Workshop on Cognitive Modeling and Computational Linguistics, 39–47. Online: Association for Computational Linguistics. https://www.aclweb.org/anthology/2021.cmcl-1.4.

Logačev, Pavel, and Shravan Vasishth. 2016. “A Multiple-Channel Model of Task-Dependent Ambiguity Resolution in Sentence Comprehension.” Cognitive Science 40 (2): 266–98. https://doi.org/10.1111/cogs.12228.

Lunn, David J., Chris Jackson, David J. Spiegelhalter, Nichola G. Best, and Andrew Thomas. 2012. The BUGS Book: A Practical Introduction to Bayesian Analysis. Vol. 98. CRC Press.

MacLeod, Colin M. 1991. “Half a Century of Research on the Stroop Effect: An Integrative Review.” Psychological Bulletin 109 (2): 163. https://doi.org/https://doi.org/10.1037/0033-2909.109.2.163.

McClelland, James L. 2009. “The Place of Modeling in Cognitive Science.” Topics in Cognitive Science 1 (1): 11–38. https://doi.org/10.1111/j.1756-8765.2008.01003.x.

Nicenboim, Bruno. 2018. “The Implementation of a Model of Choice: The (Truncated) Linear Ballistic Accumulator.” In StanCon. Aalto University, Helsinki, Finland. https://doi.org/10.5281/zenodo.1465990.

Nicenboim, Bruno, Pavel Logačev, Carolina Gattei, and Shravan Vasishth. 2016. “When High-Capacity Readers Slow down and Low-Capacity Readers Speed up: Working Memory and Locality Effects.” Frontiers in Psychology 7 (280). https://doi.org/10.3389/fpsyg.2016.00280.

Nicenboim, Bruno, and Shravan Vasishth. 2018. “Models of Retrieval in Sentence Comprehension: A Computational Evaluation Using Bayesian Hierarchical Modeling.” Journal of Memory and Language 99: 1–34. https://doi.org/10.1016/j.jml.2017.08.004.

Nicenboim, Bruno, Shravan Vasishth, and Frank Rösler. 2020. “Are Words Pre-Activated Probabilistically During Sentence Comprehension? Evidence from New Data and a Bayesian Random-Effects Meta-Analysis Using Publicly Available Data.” Neuropsychologia 142. https://doi.org/10.1016/j.neuropsychologia.2020.107427.

Nieuwland, Mante S., Stephen Politzer-Ahles, Evelien Heyselaar, Katrien Segaert, Emily Darley, Nina Kazanina, Sarah Von Grebmer Zu Wolfsthurn, et al. 2018. “Large-Scale Replication Study Reveals a Limit on Probabilistic Prediction in Language Comprehension.” eLife 7. https://doi.org/10.7554/eLife.33468.

O’Hagan, Anthony, and Jonathan J. Forster. 2004. “Kendall’s Advanced Theory of Statistics, Vol. 2B: Bayesian Inference.” Wiley.

Paape, Dario, Serine Avetisyan, Sol Lago, and Shravan Vasishth. 2021. “Modeling Misretrieval and Feature Substitution in Agreement Attraction: A Computational Evaluation.” Cognitive Science 45 (8). https://doi.org/https://doi.org/10.1111/cogs.13019.

Paape, Dario, and Shravan Vasishth. 2022. “Estimating the True Cost of Garden-Pathing: A Computational Model of Latent Cognitive Processes.” Cognitive Science 46 (8): e13186. https://doi.org/https://doi.org/10.1111/cogs.13186.

Picton, T. W., S. Bentin, P. Berg, E. Donchin, Steven A. Hillyard, R. Johnson J. R., G. A. Miller, et al. 2000. “Guidelines for Using Human Event-Related Potentials to Study Cognition: Recording Standards and Publication Criteria.” Psychophysiology 37 (2): 127–52. https://doi.org/10.1111/1469-8986.3720127.

Pinheiro, José C, and Douglas M. Bates. 2000. Mixed-Effects Models in S and S-PLUS. New York: Springer-Verlag.

Rouder, Jeffrey N. 2005. “Are Unshifted Distributional Models Appropriate for Response Time?” Psychometrika 70 (2): 377–81. https://doi.org/10.1007/s11336-005-1297-7.

Schad, Daniel J., Bruno Nicenboim, Paul-Christian Bürkner, Michael J. Betancourt, and Shravan Vasishth. 2021. “Workflow Techniques for the Robust Use of Bayes Factors.” arXiv Preprint arXiv:2103.08744.

Sorensen, Tanner, Sven Hohenstein, and Shravan Vasishth. 2016. “Bayesian Linear Mixed Models Using Stan: A Tutorial for Psychologists, Linguists, and Cognitive Scientists.” Quantitative Methods for Psychology 12 (3): 175–200.

Spiegelhalter, David J., Keith R. Abrams, and Jonathan P. Myles. 2004. Bayesian Approaches to Clinical Trials and Health-Care Evaluation. Vol. 13. John Wiley & Sons.

Stroop, J. Ridley. 1935. “Studies of Interference in Serial Verbal Reactions.” Journal of Experimental Psychology 18 (6): 643.

Vasishth, Shravan, Nicolas Chopin, Robin Ryder, and Bruno Nicenboim. 2017. “Modelling Dependency Completion in Sentence Comprehension as a Bayesian Hierarchical Mixture Process: A Case Study Involving Chinese Relative Clauses.” In Proceedings of Cognitive Science Conference. London, UK. https://arxiv.org/abs/1702.00564v2.

Vasishth, Shravan, and Felix Engelmann. 2022. Sentence Comprehension as a Cognitive Process: A Computational Approach. Cambridge, UK: Cambridge University Press. https://books.google.de/books?id=6KZKzgEACAAJ.

Vasishth, Shravan, and Andrew Gelman. 2021. “How to Embrace Variation and Accept Uncertainty in Linguistic and Psycholinguistic Data Analysis.” Linguistics 59 (5): 1311–42. https://doi.org/10.1515/ling-2019-0051.

Vasishth, Shravan, Lena A. Jäger, and Bruno Nicenboim. 2017. “Feature overwriting as a finite mixture process: Evidence from comprehension data.” In Proceedings of MathPsych/ICCM Conference. Warwick, UK. https://arxiv.org/abs/1703.04081.

Yadav, Himanshu, Dario Paape, Garrett Smith, Brian W. Dillon, and Shravan Vasishth. 2022. “Individual Differences in Cue Weighting in Sentence Comprehension: An evaluation using Approximate Bayesian Computation.” Open Mind. https://doi.org/https://doi.org/10.1162/opmi_a_00052.

Yadav, Himanshu, Garrett Smith, Sebastian Reich, and Shravan Vasishth. 2023. “Number Feature Distortion Modulates Cue-Based Retrieval in Reading.” Journal of Memory and Language 129. https://doi.org/10.1016/j.jml.2022.104400.

Yarkoni, Tal. 2020. “The Generalizability Crisis.” Behavioral and Brain Sciences, 1–37. https://doi.org/10.1017/S0140525X20001685.

For simplicity, we assume that they share the same standard deviation.↩︎

If we don’t remove the intercept, that is, if we use the formula

n400 ~ 1 + factor(subj) + c_cloze:factor(subj), withfactor(subj)we are going estimate the deviation between the first subject and each of the other subjects. This is because the default contrast coding for the subjects, treated as a population-level or fixed effect, is treatment contrasts. See chapters 6 and 7.↩︎The intercept adjustment is often called \(u_0\) in statistics books, where the intercept might be called \(\alpha\) (or sometimes also \(\beta_0\)), and thus \(u_1\) refers to the adjustment to the slope. However, in this book, we start the indexing with 1 to be consistent with the Stan language.↩︎

Another potential source of confusion here is that hyperparameters is also used in the machine learning literature with a different meaning.↩︎

One could in theory keep going deeper and deeper, defining hyper-hyperpriors etc., but the model would quickly become impossible to fit.↩︎

This is because an LKJ correlation distribution with a large \(\eta\) corresponds to a correlation matrix with values close to zero in the lower and upper triangles.↩︎